What is the article about?

When we started 'Lhotse', the project to replace the old, monolithic e-commerce platform of otto.de a few years ago, we chose self-contained systems (SCS) to implement the new shop: instead of developing a single big monolithic application, we vertically decomposed the system by business domain ('search', 'navigation', 'order', ...) into several mostly loosely coupled applications. Each application has its own UI, database, redundant data and so on. If you are more interested in the 'how' than in the 'why', please have a look at an earlier article on monoliths and microservices.

Lately, some of these SCS turned out to still be too large, so we decomposed them by extracting several microservices. Because we are already running a distributed system, cutting applications into smaller pieces is now a rather easy exercise. This is one of the reasons why I agree with Stefan Tilkov that you should not start with a monolith when your goal is a microservices architecture.

This article is not about the pros and cons of microservice architectures; it is mostly about the pros. Not because microservices have no downsides, but because I'm biased and completely convinced that they are a great idea.

The following sections might give you some insight into whether or not microservices are for you. Keep in mind, however, that I do not work for a company that has implemented microservice architectures in lots of different contexts (by the way, there are not too many people in the world who have this experience), but in only one: a really large online shop, developed as a greenfield project, with millions of customers, sometimes more than 1000 page impressions per second, almost 3 billion € turnover per year and more than a dozen dev(ops) teams continuously working on the software.

Very often, you do not really have the need for a 'real' microservice architecture but 'only' for some (more coarse-grained) self-contained systems.

In my opinion, the concept of SCS is far more important than microservices, because it solves similar problems while being much more pragmatic in many situations. In addition to this, SCS are a perfect starting point if you later decide to introduce microservices. Most of the advantages I will mention in the following are also applicable to SCS.

Efficiency & Speed

Every developer is able to start up a microservice on a notebook and hit a breakpoint in a debugger within seconds. Try this with a ten-year-old monolithic application with millions of lines of code and you will know what I'm talking about.

It's not only about debugging: you also want to develop test-first, or at least have good test coverage, right? If you try to do so, you will notice how helpful it is to be able to build and test your application within seconds. The smaller your application's code base, the easier this becomes.

You might argue that a proper build and project structure is good enough to execute unit tests frequently and quickly. But even then you will probably (hopefully) also want integration and acceptance tests. You could certainly rely on nightly builds, or on tests that only run on CI servers, to work around these kinds of problems, but then you may have to deal with constantly broken build pipelines and far longer cycle times during development. Microservices make it much easier to establish test pyramids that are fast enough to be executed by every developer before changes are pushed to the VCS.

Continuous integration, delivery and deployment have a number of great advantages compared to more traditional approaches to releasing software. Especially if you are working with multiple teams on a single system, it is much easier to frequently deploy a number of small, independent applications than a single, big monolith. The reason should be easy to understand: if you only have one application, the parts of all teams must be ready for deployment at the same time. If your system consists of multiple applications, you will naturally find more days (or hours) on which at least one of them is in a state to be deployed into production.
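A simple, purely illustrative model makes this argument concrete. Assume (an assumption for the sake of the example, not a measurement) that each of n teams' parts is ready for production on a given day with probability p, independently of the other teams. The monolith can only be deployed when all n parts are ready, while a system of n independent applications can ship something whenever at least one of them is ready:

```latex
% Assumption: each of n parts is deployable on a given day with probability p, independently.
P_{\text{monolith ready}} = p^{n}
\qquad\text{vs.}\qquad
P_{\text{at least one application ready}} = 1 - (1 - p)^{n}

% Example with p = 0.8 and n = 5:
0.8^{5} \approx 0.33
\qquad\text{vs.}\qquad
1 - 0.2^{5} = 0.99968
```

With five teams that are each ready on 80% of all days, the monolith could only go live on roughly a third of the days, while at least one of five independent applications could be deployed on practically every day. Real teams are of course not independent, so the numbers only show the direction of the effect.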

The following chart depicts the number of live deployments per week over the last two years. At the beginning of 2015, we introduced our first microservices, and over the year we developed more and more of them. In addition, we learned how to deploy these services continuously and fully automatically after every commit. By doing so, we increased the number of deployments from ~20 to more than 200 per week.

The red line shows the number of incidents we internally classified as "priority 1" or "emergency". As you can see (and contrary to many people's fears), there is no correlation between the number of deployments and the number of serious errors.

At otto.de, every application is implemented by exactly one team, and every application can be deployed independently because the applications are loosely coupled. Consumer-driven contract tests (CDCs) are very helpful to ensure that deploying one service does not break other services.
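To illustrate the idea behind CDCs, here is a minimal sketch in Python. It deliberately does not use a contract-testing tool such as Pact; the contract format, the `product_response` handler and all field names are hypothetical. The consumer publishes the fields it relies on, and the provider runs that contract against its own response in its pipeline, before it is deployed:

```python
from typing import Any

# Hypothetical contract published by a consumer (e.g. a shopping-cart service):
# the fields it relies on in the provider's product response, and their types.
CART_CONTRACT: dict[str, type] = {
    "id": str,
    "name": str,
    "price_cents": int,
}


def product_response(product_id: str) -> dict[str, Any]:
    """Hypothetical response of the provider (a 'product' service)."""
    return {
        "id": product_id,
        "name": "Example sofa",
        "price_cents": 49900,
        "availability": "in_stock",  # extra fields are fine: the consumer ignores them
    }


def verify_contract(contract: dict[str, type], response: dict[str, Any]) -> list[str]:
    """Return all contract violations; an empty list means the contract holds."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            violations.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    return violations


if __name__ == "__main__":
    # In the provider's pipeline, a non-empty result would fail the build
    # before the provider is deployed.
    problems = verify_contract(CART_CONTRACT, product_response("42"))
    print(problems or "contract satisfied")
```

The point of the design is the direction of the check: the provider may change anything the consumers have not declared in their contracts, so it can be deployed without coordinating with them.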

There is no need to coordinate deployments between applications anymore. Developers deploy software on their own, or deployments are fully automated. We are currently deploying multiple times a day, up to 250 times a week (and the number is continuously increasing). If you are able to deploy more or less every single commit into production within minutes, you definitely gain momentum. Every kind of development is accelerated. And the more often you deploy, the less risky your deployments become.

Also, interesting approaches like hypothesis-driven development are supported by quick develop-release-test-learn cycles. The shorter the time between development and learning, the quicker you will be able to learn from your mistakes - and successes.

However, the most important advantage of microservices with respect to efficiency & speed is that developers can easily understand them in every detail. In contrast to a monolith with a hundred thousand lines of code (LOC), a microservice allows every new developer in a team to become productive within days or even hours. In my team, for example, it is very common for a new team member to deploy their first commit to live on the very first day (first-commit-to-live is a very interesting KPI, by the way).

Being able to understand an application in every detail is also important in everyday development: if you have to fix a problem or extend the application, you first have to understand what you have to do. The larger the application, the more time you will generally need to change it without breaking something important. Put the other way around: no developer on this planet is able to fully understand a million-lines-of-code application. Ok - at least I am completely unable to do so.

Microservices, on the other hand, help you quickly find out which part of the system has to be changed, and how to change it without breaking anything, simply because microservices are small enough to be understood by people like me.

Motivation

Do you remember the last time you had the possibility to build a new system from scratch? For many developers, greenfield projects feel like heaven: you do not have to mess around with 'legacy' software, and you are able to use up-to-date solutions. You can develop a clean design and you are not restricted by old, botched-up code.

It's fun. It's motivating. You can try out new stuff. It's far more productive. And - because you are constantly improving your development skills - the new microservice will probably be better than what you have built before.

Because microservices are - well - micro, you will be able to have these feelings every few weeks or months. And it's not only the motivation of building something new: it is also very interesting because every microservice is part of a big, interesting architecture. And it is very motivating to solve problems every day in a very efficient way, without having to dig through years-old crappy code.

Why should you care about the motivation of developers? What's so bad about having them sift through legacy code? Well, in case you didn't realize it yet: software development is an employees' market. Great developers are not applying for jobs at your company - your company has to apply for every single great developer. If you intend to get (and keep) really good developers, you have to do something. If you are frustrating them with boring stuff - well, good luck.

Scalability

Ok, I will keep this part short even though it is one of the most important topics, because I've already covered it in some earlier articles [German].

Technical Scalability

If you need to scale things (like software), you need to decouple them along different dimensions (the more, the better):

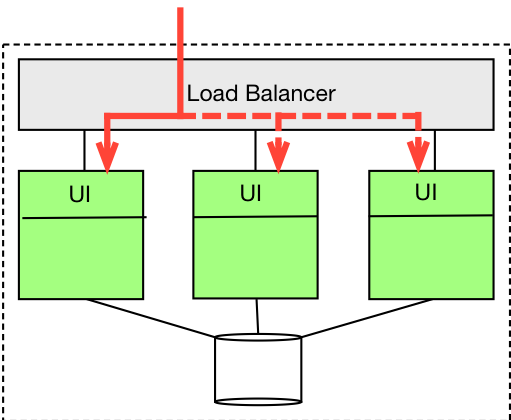

You could scale by cloning the same thing (aka load balancing): for example, you could clone one application multiple times and balance the load across these clones.
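A minimal sketch of the cloning dimension in Python, assuming a simple round-robin strategy (real load balancers offer many others, such as least-connections). The instance addresses are hypothetical:

```python
from itertools import cycle


class RoundRobinBalancer:
    """Distributes requests evenly across identical clones of one service."""

    def __init__(self, instances: list[str]) -> None:
        if not instances:
            raise ValueError("need at least one instance")
        # cycle() repeats the list endlessly: 1, 2, 3, 1, 2, 3, ...
        self._instances = cycle(instances)

    def next_instance(self) -> str:
        return next(self._instances)


# Hypothetical clones of a search service
balancer = RoundRobinBalancer(["search-1:8080", "search-2:8080", "search-3:8080"])
targets = [balancer.next_instance() for _ in range(4)]
print(targets)  # ['search-1:8080', 'search-2:8080', 'search-3:8080', 'search-1:8080']
```

This only works because the clones are interchangeable: any instance can answer any request, so adding capacity means adding another address to the list.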

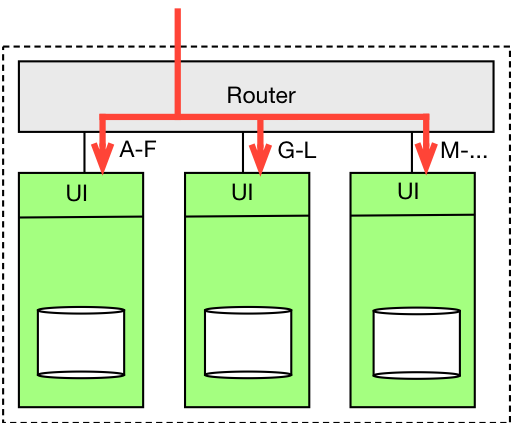

You could scale by grouping similar things (aka sharding): For example, you could have multiple applications being responsible for different data sets, or you could have multiple data centers being responsible for different user groups.
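Sharding can be sketched in a similar way, assuming a simple hash-modulo scheme with a customer ID as the shard key. The shard names and key format are hypothetical:

```python
import hashlib

# Hypothetical clusters, each responsible for a different subset of customers
SHARDS = ["cluster-a", "cluster-b", "cluster-c"]


def shard_for(customer_id: str, shards: list[str] = SHARDS) -> str:
    """Map a shard key to exactly one shard; the same key always lands on the same shard."""
    # Use a stable hash: Python's built-in hash() of a str changes between processes.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


print(shard_for("customer-4711"))
```

Note that plain modulo remaps most keys whenever the number of shards changes; schemes such as consistent hashing reduce that movement, at the cost of some extra complexity.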

You could scale by separating different things: a system can be decomposed into multiple collaborating applications. This can be achieved by vertical decomposition (multiple mostly independent applications or logical units) and/or by also splitting horizontally (distributed computing).

A perfectly scalable system uses all three (in my opinion four) dimensions: it consists of multiple logical "verticals", each possibly consisting of multiple microservices. Every microservice may have one to many clones, so the load can be distributed over multiple processes. Sharding is used to distribute different kinds of requests to multiple data centers or clusters, using geolocation or other shard keys.

In a microservice architecture, you already have two dimensions of scale: load balancing and distributed computing. This enables you to scale single parts of your system independently. In an online shop, for example, you would possibly have a few instances of a shopping-cart microservice but more instances of a search microservice, because your customers will most probably search for products more often than they buy them.
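A back-of-the-envelope sketch of what scaling single parts independently means: each service gets its own instance count, derived from its own load. All numbers below are hypothetical, not measurements from otto.de, and the minimum of two instances is an assumed redundancy rule:

```python
import math


def required_instances(peak_rps: float, rps_per_instance: float, min_instances: int = 2) -> int:
    """Instances needed so that no instance exceeds its (assumed) capacity."""
    return max(min_instances, math.ceil(peak_rps / rps_per_instance))


# Hypothetical peak load and per-instance capacity (requests per second)
load = {"search": 900.0, "shopping-cart": 60.0}
capacity = {"search": 150.0, "shopping-cart": 100.0}

plan = {svc: required_instances(load[svc], capacity[svc]) for svc in load}
print(plan)  # {'search': 6, 'shopping-cart': 2}
```

In a monolith, the same peak would force you to clone the whole application six times, including the shopping cart that only needed two instances.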

The more services you have, the easier it is to scale single things independently.

Organizational Scalability

Especially in large systems, there is often a need to scale not only with respect to load or data: you will most likely also want to be able to scale with respect to the number of developers and other people.

If you need to have more than a few development teams, microservices will help you to scale your organization: Different teams can be responsible for one or more services. Multiple services and/or teams can be grouped by feature domains or other criteria.

The more services you have, the easier it is to introduce a new team. Even more important: development teams can be kept small, autonomous and efficient.

Application complexity

Compared to monolithic architectures, the complexity of a single service is extremely low. Every single developer is able to fully understand a microservice in every detail.

Granted, the overall complexity of a microservice architecture is high, but especially in the beginning, new team members will most likely concentrate on one or a few services. This is extremely helpful to get new developers productive on short notice. The interesting part is that high cohesion and loose coupling help everybody understand what a change to the software will do.

Because microservices are so small, there is almost no need to introduce a highly sophisticated internal architecture with lots of abstractions, layers, modules and frameworks. Small services can be implemented in a simple and straightforward way.

Just like classes, interfaces and namespaces do, microservices and verticals help you structure your system. And as they are separate processes, it is at least difficult to bypass their APIs, intentionally or unintentionally. This is why microservices will also help you scale your application (or system) with respect to its size and complexity.

Chances & Risks

In a monolithic architecture, it is very risky to rely on cutting-edge languages and frameworks. Today, using Java might still be ok, but it is already getting difficult to hire 'great developers' if you have to stick to this language only. Many developers like modern languages. They like new technology. They most often dislike stuff that was modern ten years ago... But it's not only about the motivation of developers. It is much more important to keep your software up to date. Software is the DNA of modern companies, and it is very expensive to develop.

Microservices give you the opportunity to use the 'best' tech-stack for every single use case. If you are choosing something that turns out to be not-so-perfect, it is easy, cheap and almost risk-free to replace a microservice by a better solution.

You can also try out new languages, frameworks or new (micro-)architectures. Not because it's fun, but because you and your developers can learn from the experience - you will become better developers.

This kind of learning experience is completely different from any "hobby project" a developer can do in his/her spare time. Learning from code in production is the real thing.

Automation & Quality

There is one point about microservices that seems to be a disadvantage in the beginning: you are forced to automate everything. Setting up new services, configuring services, firewalls and load balancers, deploying services, updating services, setting up databases, and so on, and so on. If you need to do these kinds of things manually for every single microservice, people will go mad. You definitely need to automate such tasks.

While it is initially a hurdle, automation really pays off: your system will become much more robust, configuration errors will decline and manual work will be reduced to a minimum.

In my team, for example, all microservices are continuously deployed into production after every single commit (I'm partly lying: the oldest and biggest service is still different. But this one is not micro - and we are working on it). The deployment pipelines of the microservices are fully automated, without a single manual step. Not only that: setting up and configuring a new microservice is automated as well, and so is setting up a deployment pipeline. Updating common dependencies on 3rd-party libraries is scripted, and so on.

The effort to let software do everyday tasks is initially high, but it is very rewarding. Currently, it takes us maybe half an hour to create a new microservice including its pipeline and bring it into production.

We are not managing bugs anymore: we are simply fixing them. If we have introduced a more complicated bug, we simply switch back to the previous version - and we mostly know which commit introduced the bug. Our QA people are not managing bugs anymore either: they do not have to test "releases", and they do not even write regression tests themselves (because the developers do). Instead, they now have the time to bring their expertise into the process earlier. They help product owners write acceptance tests, and they are able to pair with developers. Put more generally, their primary objective is shifting from hunting bugs to preventing bugs.

Fault Tolerance

Microservices should be responsible for a single feature (or a few highly cohesive features). If a microservice crashes, only that single feature is affected. Of course, it is not easy to develop a system of microservices that is resilient to failures. But cutting a system into independent applications is at least very helpful, because processes are great failure units (aka bulkheads). Not only because today's operating systems are very robust against crashes of single processes, but also because developers are forced to access components through their exposed APIs.
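Separate processes are the bulkheads between services. On the calling side, a common complementary technique is to isolate calls per dependency, so that one slow or broken feature cannot exhaust the resources the others need. The following is a minimal sketch in Python, assuming one small thread pool per feature plus a fallback value; the feature names, pool sizes and timeout are hypothetical:

```python
from collections.abc import Callable
from concurrent.futures import ThreadPoolExecutor

# One small, bounded pool per downstream feature: a hanging feature can only
# tie up the threads of its own pool, not those needed by other features.
POOLS = {
    "recommendations": ThreadPoolExecutor(max_workers=4),
    "search": ThreadPoolExecutor(max_workers=8),
}


def call_with_bulkhead(feature: str, fn: Callable[[], str], fallback: str,
                       timeout_s: float = 0.5) -> str:
    """Run fn in the feature's own pool; degrade to the fallback on error or timeout."""
    future = POOLS[feature].submit(fn)
    try:
        return future.result(timeout=timeout_s)
    except Exception:
        # Covers both exceptions raised by fn and the timeout raised by result().
        # A timed-out call keeps running, but only inside its own pool.
        return fallback


def broken_recommendations() -> str:
    raise ConnectionError("recommendation service down")


page = [
    call_with_bulkhead("search", lambda: "search results", fallback="search unavailable"),
    call_with_bulkhead("recommendations", broken_recommendations, fallback=""),
]
print(page)  # ['search results', '']
```

The page still renders its search results while the recommendations quietly disappear, which is the "only one single feature is affected" behavior described above.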

In a monolithic application, it is very tempting and easy to bypass (or break) a module API and directly access the internals of the module. Over time, even the best design will erode because of such shortcuts: accidental ones, and intentional ones (I'll fix it later...). From the perspective of fault tolerance, this may quickly become a real challenge, because after some time it is extremely hard to understand what kind of problems will cause what kind of errors. The more tangled your code is, the more likely it becomes that some error will be able to tear down your whole system.

Using microservices instead of monolithic applications is very helpful to keep your features loosely coupled. New and/or poorly implemented features will mostly have no impact on other features, because they run in a separate process, possibly on a different machine, using a separate database and code base.

There is no such thing as a free lunch. Choosing a microservice architecture will not give you fault tolerance by itself. But microservices will help you implement a resilient system - and they will help you keep it that way over the years.

Sustainability

This topic is - at least in my understanding - the most important one. Most of us forget that today's most up-to-date systems will be tomorrow's legacy applications: the stuff that you, or the people after you, will swear about; software that might prevent your company from surviving future challenges.

Monoliths are bad design - and you know it. If you are building some important application as a monolith today, some day you will inevitably have to replace it with something new. Maybe in two years? Ten years? Thirty years? Some weeks ago, OTTO replaced its stone-age host system. Not because it was not working anymore, but primarily because it was almost impossible to find developers willing to work on a decades-old application - and because the existing developers were retiring one after another. Replacing this system was definitely no fun. The project took years, it cost a lot of money and it was highly risky.

In the last two decades, companies have built thousands of monolithic applications using J2EE and similar (already partly outdated) technologies. Sometimes hundreds in a single company. How likely do you think it is that these applications will still be adequate ten or twenty years from now? In a few years, today's mediocre solutions will be much worse than any host system from the seventies. Companies will go out of business just because of the software that we are still developing today.

Some months ago, Oliver (my former boss) said something very optimistic about the current otto.de platform: "we will never again have a project to reimplement otto.de". He was probably right: while we might have to replace every single bit of the current software over the next ten-or-so years, we may never need an incredibly risky project to replace everything in a single step. Maybe we will see dozens of larger efforts to replace parts of it. But we should not face a need to replace really large parts of it.

In the first place, we are building software to solve today's problems. Sometimes, we are also able to build software that is the foundation for solving tomorrow's problems. But if software really is the DNA of your company, and if your business will have to change as rapidly as it does today, then you definitely need to find a software architecture that is sustainable over years and decades.

In my opinion, this is by far the most important aspect of microservice architectures: If you get it right, you have the chance to build a system that will never be the reason why your company will be unable to adapt to changing requirements. You will have to continuously work on your software - but you are able to do so in relatively small, affordable and risk-free steps.
