What is behind LLMs like ChatGPT?

Ever wondered how large language models like ChatGPT or Claude really work? You’re not alone! The buzz around large language models (LLMs) is growing fast — and for good reason. These language models are the backbone of today’s AI and are changing the way we search for information, communicate, and work.

In this article, we explain how an LLM works, what training one involves, what its strengths and weaknesses are, and why these technologies are poised to shape the future of communication. This explanation is based on the insights of Andrej Karpathy, a leading AI researcher, founding member of OpenAI, and former Director of AI at Tesla, who also worked at Google earlier in his career.

What is a large language model (LLM)?

A large language model is an AI system trained on massive amounts of text data to understand, process, and generate text. Well-known examples include:

- ChatGPT (OpenAI)

- Gemini (Google DeepMind)

- Claude (Anthropic)

These models identify patterns, grammatical structures, and semantic meaning in language. They “learn” by analyzing billions of text passages, extracting underlying rules and relationships. The goal is to generate responses that are statistically likely, relevant, and coherent for any given text input.

The LLM as a Compressed Knowledge Archive

Andrej Karpathy describes an LLM as a kind of “zip file of the internet”. In other words, an LLM holds a compressed, statistical snapshot of the world’s textual knowledge – up to the point it was trained. However, since this knowledge isn’t always up to date, modern language models are increasingly combined with web search capabilities to access real-time information.
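To illustrate how such a combination can work, here is a minimal Python sketch, assuming a tiny hard-coded document list and a naive word-overlap score in place of a real search engine (the function names and the prompt format are hypothetical). The key point: retrieved text is pasted into the prompt, so the model reads it as context, while the compressed knowledge in its weights stays unchanged.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase the text and return the set of words it contains."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most words with the query."""
    query_words = words(query)
    ranked = sorted(documents, key=lambda d: len(query_words & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    """Paste the retrieved text into the prompt; the model's weights stay untouched."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical documents standing in for fresh search results.
docs = [
    "The store opens at 9 a.m. on weekdays.",
    "Bananas are rich in potassium.",
]
print(build_prompt("When does the store open?", docs))
```

Production systems typically use a web search API or embedding-based similarity search instead of word overlap, but the final step, adding the retrieved text to the prompt, follows the same idea.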

Strengths and Weaknesses of Large Language Models

LLMs are incredibly powerful – but far from perfect. Karpathy uses the metaphor of Swiss cheese: the model performs impressively in many areas, but still has “holes” in the form of unexpected weaknesses.

Strengths

- Versatility in language and style

- High-level natural language processing capabilities

- Broad general knowledge (as of the training cutoff)

- Applicable in a wide range of use cases: content creation, customer support, data analysis

Weaknesses

- No guaranteed factual accuracy (“hallucinations”)

- Outdated knowledge without internet access

- Failure on surprisingly simple tasks

- Not truly “intelligent”—just statistical pattern prediction

Thanks to ongoing reinforcement learning, better data sources, and human feedback loops, LLMs are improving at a rapid pace.

How Is a Large Language Model (LLM) Built?

Phase 1: Pretraining and Tokenization

In this initial phase, the model is trained on massive amounts of text data from the internet. These texts are broken down into tokens: chunks of text, often whole words or pieces of words, each mapped to a number in a fixed vocabulary. The goal: to train the model to predict which token is statistically most likely to come next, given all the tokens before it.
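To make tokenization more concrete, here is a toy sketch of byte-pair encoding (BPE), a widely used tokenization technique: start from single characters and repeatedly merge the most frequent adjacent pair into a new token. This is a simplified illustration, not the tokenizer of any specific model; real tokenizers typically operate on bytes, learn many thousands of merges from huge corpora, and then apply those learned merges to new text.

```python
from collections import Counter
from typing import List, Optional, Tuple


def most_frequent_pair(tokens: List[str]) -> Optional[Tuple[str, str]]:
    """Return the most frequent adjacent token pair (ties: first seen wins)."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge(tokens: List[str], pair: Tuple[str, str]) -> List[str]:
    """Replace every occurrence of `pair` with a single merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def train_bpe(text: str, num_merges: int) -> List[str]:
    """Start from single characters and apply `num_merges` greedy merges."""
    tokens = list(text)
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        tokens = merge(tokens, pair)
    return tokens


tokens = train_bpe("low lower lowest", num_merges=2)
# Map each distinct token to an integer ID, as a vocabulary would.
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
ids = [vocab[tok] for tok in tokens]
print(tokens)  # ['low', ' ', 'low', 'e', 'r', ' ', 'low', 'e', 's', 't']
print(ids)     # [2, 0, 2, 1, 3, 0, 2, 1, 4, 5]
```

After two merges, the frequent sequence “low” has become a single token, and every token is mapped to an integer ID. The model only ever sees such ID sequences, never raw characters.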

The result of this pretraining phase is a so-called base model: it can generate coherent text by continuing its input, based on the patterns it has learned from data, but it is not yet a helpful assistant.
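A drastically simplified stand-in for pretraining is a bigram model, which learns next-token probabilities just by counting which token follows which in a tiny, made-up corpus. The sketch also computes the average cross-entropy loss, i.e. the negative log-probability the model assigns to each actual next token. An LLM minimizes this same kind of loss, but with a neural network that looks at long contexts instead of a count table that only looks at the previous token.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count which token follows which and normalize counts into probabilities."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return {
        prev: {tok: c / sum(nxt_counts.values()) for tok, c in nxt_counts.items()}
        for prev, nxt_counts in counts.items()
    }


def cross_entropy(model, tokens):
    """Average negative log-probability the model gives each actual next token."""
    losses = [-math.log(model[prev][nxt]) for prev, nxt in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)


# Tiny made-up corpus, already split into word-level tokens.
corpus = (
    "the weather today is nice . "
    "the weather today is warm . "
    "the weather today is nice ."
).split()

model = train_bigram(corpus)
print(model["is"])                   # nice: 2/3, warm: 1/3
print(cross_entropy(model, corpus))  # lower is better
```

Because “is” was followed by “nice” twice and by “warm” once, the model assigns them probabilities of 2/3 and 1/3: patterns learned from data, not rules written by hand.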

Example: Given the prompt “The weather today is very ___”, the model might predict completions like nice, warm, or rainy, based on context.
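Under the hood, the model outputs a raw score (logit) for every token in its vocabulary, and the softmax function turns these scores into probabilities. The sketch below uses three invented scores for the example completions; the temperature parameter, which many LLM interfaces expose, controls how peaked the resulting distribution is.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature < 1 sharpens, > 1 flattens."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


candidates = ["nice", "warm", "rainy"]
logits = [2.0, 1.0, 0.5]  # invented scores, not taken from a real model

for temperature in (1.0, 0.5):
    probs = softmax(logits, temperature)
    print(temperature, {tok: round(p, 2) for tok, p in zip(candidates, probs)})
```

With these made-up scores, “nice” gets roughly 63% at temperature 1.0 and roughly 84% at temperature 0.5: lower temperatures make the most likely token even more dominant, higher ones spread probability more evenly.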

Reasoning abilities, which emerge during pretraining and are refined through later training methods, allow an LLM to work through problems step by step, draw conclusions, and solve tasks, though not reliably in every case. These capabilities also enable the model, when asked, to review its own output and point out errors or areas for improvement.

Pro tip: If you want to dive deeper into how the neural network is trained, check out Andrej Karpathy's video “Deep Dive into LLMs like ChatGPT”, starting at minute 15.

Phase 2: Supervised Fine-Tuning (SFT)

After pretraining, the model enters a fine-tuning stage in which it is trained on a curated dataset of example conversations, with ideal responses written and reviewed by human annotators. This step teaches the model how to respond appropriately in real-world conversations, taking on the role of an assistant. The aim is to improve response quality, making outputs more relevant, safe, and accurate. Human annotators play a critical role: the examples they write and review become the training data.
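During fine-tuning, each conversation is typically flattened into one sequence with special tokens that mark who is speaking, and the model keeps doing next-token prediction on it. The sketch below assumes a hypothetical template (`<|user|>`, `<|assistant|>`, and `<|end|>` are illustrative, not the markers of any particular model) and builds a loss mask so that only the assistant's reply is trained on, a common choice in fine-tuning setups. For readability, the mask is per character rather than per token.

```python
# Hypothetical role markers for illustration; real models each define their own.
def format_conversation(messages):
    """Flatten (role, text) pairs into one training string plus a loss mask.

    mask[i] == 1 means character i belongs to an assistant reply and is
    trained on; 0 means it is context only. Real setups mask tokens, not
    characters.
    """
    text, mask = "", []
    for role, content in messages:
        header = f"<|{role}|>"
        body = content + "<|end|>"
        text += header + body
        mask += [0] * len(header)
        mask += [1 if role == "assistant" else 0] * len(body)
    return text, mask


conversation = [
    ("user", "What is the capital of France?"),
    ("assistant", "Paris."),
]
text, mask = format_conversation(conversation)
print(text)
print("".join(ch for ch, m in zip(text, mask) if m))  # Paris.<|end|>
```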

Phase 3: Reinforcement Learning from Human Feedback (RLHF)

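In the final phase, the model is further refined using reinforcement learning based on human feedback. Human raters compare and rank several model responses to the same prompt; a separate reward model is trained on these rankings, and the LLM is then optimized to produce responses that the reward model scores highly. In this way, it learns to favor helpful, high-quality responses and avoid poor ones.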
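The reward model in RLHF is commonly trained with a pairwise loss: for two responses where humans preferred one, the loss is `-log(sigmoid(r_chosen - r_rejected))`, which is small when the reward model already scores the preferred response higher. Here is a minimal sketch with hand-picked reward values; a real reward model is itself a neural network, and the subsequent reinforcement learning step is not shown.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)), computed stably."""
    diff = reward_chosen - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# Hand-picked reward scores for two answers to the same prompt,
# where human raters preferred the first answer.
print(preference_loss(2.0, -1.0))  # small: preferred answer already scores higher
print(preference_loss(-1.0, 2.0))  # large: ranking is the wrong way round
```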

RLHF is one of the key reasons behind the quality of today's LLMs.

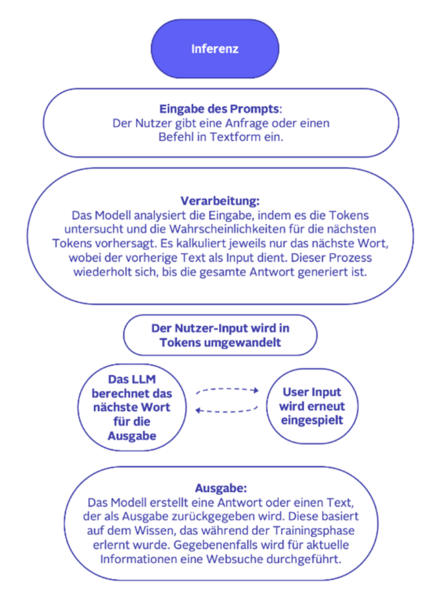

How Inference Works in a Language Model

Inference refers to the moment when a fully trained model responds to a new input:

- Input: You type a question or prompt.

- Tokenization: The text is broken down into individual tokens.

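- Prediction: The model calculates a probability for every token in its vocabulary being the next one, and one token is selected, either the most likely one or a sample drawn according to those probabilities.

- Output: The selected token is appended to the input, and the process repeats. The response is thus generated token by token, with each new token depending on all of the preceding context.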
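The steps above can be sketched as a short Python loop. A hypothetical hand-written table of next-token probabilities stands in for the trained model; unlike a real LLM, it looks only at the last token rather than the whole context, and tokens here are whole words for readability.

```python
import random

# Hypothetical next-token table standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "The": {"weather": 1.0},
    "weather": {"today": 1.0},
    "today": {"is": 1.0},
    "is": {"very": 1.0},
    "very": {"nice": 0.6, "warm": 0.3, "rainy": 0.1},
    "nice": {"<end>": 1.0},
    "warm": {"<end>": 1.0},
    "rainy": {"<end>": 1.0},
}


def generate(prompt_tokens, max_new_tokens=10, greedy=False, rng=None):
    """Append one predicted token at a time until <end> or the length limit."""
    rng = rng or random.Random()
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        dist = NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})
        if greedy:
            nxt = max(dist, key=dist.get)  # always take the most likely token
        else:
            nxt = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
        if nxt == "<end>":
            break
        tokens.append(nxt)  # the new token becomes part of the context
    return tokens


print(" ".join(generate(["The", "weather"], greedy=True)))  # The weather today is very nice
print(" ".join(generate(["The", "weather"], rng=random.Random(0))))
```

Greedy decoding always picks the most likely token; sampling can produce “warm” or “rainy” instead, which is one reason the same prompt can yield different answers.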

The model doesn’t “think” like a human. Instead, it follows probability patterns it learned during training.

Conclusion: The Future of Language Models

Large language models (LLMs) like ChatGPT or Gemini mark the beginning of a new era in human–machine interaction. Their multimodal capabilities, combining text, images, audio, and video, together with a constant stream of user feedback, are making them steadily more capable.

The range of applications is broad: from personalized assistants to automated content generation to data-driven decision-making in business.

Understanding how an LLM works today puts you ahead of the curve, whether you're a content creator, a developer, or a decision-maker navigating digital transformation. I hope this guide helped you gain a clearer understanding of how LLMs like ChatGPT actually work! :-)

FAQ: Large Language Models (LLM)

What does LLM stand for?

LLM stands for Large Language Model, an AI system designed to process and generate natural language.

How does a language model learn?

Through pretraining on billions of text samples, supervised fine-tuning on example conversations written by humans, and reinforcement learning from human feedback.

Are LLMs intelligent?

Not in the human sense—they simulate intelligence using probability but lack true consciousness or awareness.

Which LLMs are out there?

Examples include ChatGPT, Gemini, Claude, LLaMA, Mistral, and many others.

How up-to-date is an LLM’s knowledge?

An LLM’s knowledge is limited to the point of its last training—this is known as the knowledge cutoff. Some models can access real-time information (e.g., through web search or database queries), but the LLM itself only contains knowledge up to its training date. These external sources are not part of the model itself.
