What is the article about?

Although they've been around for a while, Large Language Models (LLMs) have taken the world by storm since ChatGPT was launched. Companies as well as open-source players are scrambling to stay abreast of LLM development, driving evolution in this field at breakneck speed. At the same time, a surprising number of everyday users have already made LLM support part of their daily lives.

ChatGPT currently dominates the AI landscape like no other model.

Could it also be used to create good product texts for the OTTO Webshop? This is precisely the question we set out to tackle in the inc(AI) team during a two-week sprint. The result is ProductGPT, a tool that can do exactly that!

We received great support from the Content Factory team, who gave us all product data in the leisure suit category along with rules on creating formal product texts and further content.

We quickly got down to work in two subteams: Prompters and RuleCheckers.

Status quo

Right now, product texts for larger product groups are already being created using NLG (Natural Language Generation) methods. However, this often incurs high costs and requires significant coordination with the third-party provider. The method is therefore only used for large product groups, which means that texts for smaller product groups are still being painstakingly written by hand.

LLM-based approach

Our goal was to find out whether we could reliably generate product descriptions via ChatGPT, using only the eponymous platform and adding a few prompts. But first let's take a quick step back to define what ChatGPT and Large Language Models (LLM) in general actually are.

A Language Model (LM) is simply a probability distribution over word sequences. In particular, it can predict the next most likely word given a specific start to a sentence, a paragraph, or an entire text – in fact, it knows the probabilities of all possible next words. How would you complete the sentence: "As a new pet, I'd like a..."? We can train an LM with data so it learns to calculate the probabilities of continuing the sentence with "dog", "cat" or "hamster", but also with "table", "sky" or "orange".
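To make the "probability distribution over word sequences" concrete, here is a minimal Python sketch of the simplest possible LM: a bigram model that estimates the probability of the next word from the previous word only, by counting word pairs in a tiny hypothetical corpus. Real LLMs condition on the whole preceding text and also assign small non-zero probabilities to words like "table" or "sky"; this counting model gives unseen continuations probability zero.

```python
from collections import Counter, defaultdict


def train_bigram_lm(corpus):
    """Estimate P(next word | previous word) by counting word pairs in a corpus."""
    pair_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for previous, following in zip(words, words[1:]):
            pair_counts[previous][following] += 1
    # Normalise the counts for each previous word into a probability distribution.
    model = {}
    for previous, counter in pair_counts.items():
        total = sum(counter.values())
        model[previous] = {word: count / total for word, count in counter.items()}
    return model


# Hypothetical training data for "As a new pet, I'd like a ..."
corpus = [
    "i'd like a dog",
    "i'd like a cat",
    "i'd like a dog",
    "i'd like a hamster",
]
lm = train_bigram_lm(corpus)
print(lm["a"])  # {'dog': 0.5, 'cat': 0.25, 'hamster': 0.25}
```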

With an LM, there's a very simple way to generate text: starting from a given input, ask the LM for the most probable next word, append that word to the input and repeat the process autoregressively. Even the most cutting-edge Deep Learning approaches still rely on variants of this basic principle today.
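The generation loop described above can be sketched in a few lines of Python. This is a minimal illustration of greedy autoregressive decoding, assuming a "model" is any function that maps the full context to next-word probabilities; the toy table is hypothetical and only looks at the last word, whereas a real LLM uses the whole context.

```python
from typing import Callable, Dict, List

# A "model" maps the full context (all words so far) to next-word probabilities.
NextWordModel = Callable[[List[str]], Dict[str, float]]


def greedy_generate(model: NextWordModel, prompt: List[str], max_new_words: int = 10) -> List[str]:
    """Autoregressive greedy decoding: always append the single most probable next word."""
    context = list(prompt)
    for _ in range(max_new_words):
        probabilities = model(context)
        if not probabilities:  # the model has no continuation: stop
            break
        context.append(max(probabilities, key=probabilities.get))
    return context


# Hypothetical toy model: it only looks at the last word of the context.
TOY_TABLE = {
    "i'd": {"like": 0.8, "love": 0.2},
    "like": {"a": 0.7, "the": 0.3},
    "a": {"dog": 0.5, "cat": 0.3, "hamster": 0.2},
}


def toy_model(context: List[str]) -> Dict[str, float]:
    return TOY_TABLE.get(context[-1], {})


print(" ".join(greedy_generate(toy_model, ["i'd"])))  # i'd like a dog
```

Each newly generated word becomes part of the input for the next step, which is exactly what "autoregressive" means here.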

For five years now, the Transformer architecture has enabled AI models to make far more efficient use of existing computing resources. It also scales well, both in the number and complexity of the internal parameters that govern how the model learns and in the volume of training data. GPT-3 showed that once LMs become large enough, new and unexpected capabilities emerge, even though these (L)LMs are still based on the same fundamental concept.

On the one hand, what distinguishes 'normal' LMs from LLMs is the volume of the data corpus used for training and the hardware resources required to do so (massive use of GPUs). On the other hand, with the aid of human feedback (HF), the models are fine-tuned so that, for a given prompt, they deliver the text that humans would rate highest as often as possible.

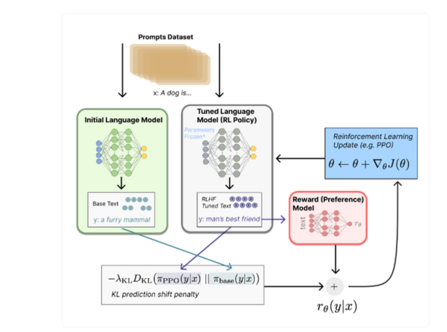

One candidate to help drive this fine-tuning process is Reinforcement Learning with Human Feedback (RLHF).

Triples of the form (prompt, generated text, human rating, e.g. based on an Elo score) are used to train a 'reward' model. This model takes the prompt and the generated text as input and outputs a score for how well the generated text matches the prompt. With the aid of this reward model, the original model is then fine-tuned using the reinforcement-learning method Proximal Policy Optimisation (PPO). Figure 1 illustrates this training procedure.

Figure 1: RLHF (Source: https://huggingface.co/blog/rlhf)
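The article mentions Elo scores only in passing. As an illustration, the following Python sketch shows how the Elo system turns pairwise human judgements ("text A is better than text B") into scalar ratings that could serve as training targets for a reward model. The function names are hypothetical, and the K-factor of 32 and starting rating of 1000 are conventional Elo defaults, not values from the article.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Elo expectation that text A is preferred over text B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def rate_texts(comparisons, initial_rating=1000.0, k_factor=32.0):
    """Turn pairwise human judgements (winner, loser) into Elo ratings."""
    ratings = {}
    for winner, loser in comparisons:
        r_winner = ratings.setdefault(winner, initial_rating)
        r_loser = ratings.setdefault(loser, initial_rating)
        # The winner gains more when the win was unexpected (low expected score).
        delta = k_factor * (1.0 - expected_score(r_winner, r_loser))
        ratings[winner] = r_winner + delta
        ratings[loser] = r_loser - delta
    return ratings


# Hypothetical human preferences between three generated texts.
human_preferences = [("text_a", "text_b"), ("text_a", "text_c"), ("text_b", "text_c")]
ratings = rate_texts(human_preferences)
print(sorted(ratings, key=ratings.get, reverse=True))  # ['text_a', 'text_b', 'text_c']
```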

Steering an LLM

The key aspects we analysed were how far an LLM can be steered via its parameters, and the interplay between text correctness and controllability on the one hand and free, creative wording on the other.

The stochastic nature of LLMs makes 'text correctness' a demanding requirement. However, configuration options exist that can be applied to steer the model's behaviour.

Prompt Engineering has the largest influence on text generation and will be discussed a little later in this article.

Predictable or free-text generation?

Let's take a specific look at the essential nature of an LM:

- Can we look a bit further ahead in the generation process to make sure we always choose the best possible generation paths, accepting slightly slower text generation as a trade-off?

- Do we control the model to ensure it always uses the most appropriate and most probable next words – or do we also let it augment the text with less-probable next words, which might possibly deliver more creative results?

The first question quickly leaves intuition behind and calls for technical expertise. In fact, the corresponding options are not available in the newer OpenAI models.
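Looking ahead in the generation process is commonly done with beam search: instead of committing to the single most probable next word, the decoder keeps the `beam_width` most probable partial sequences and compares them by their total probability. The Python sketch below is a minimal, hypothetical illustration on a toy model where the greedy choice leads to a worse overall sequence; it is not how OpenAI's models decode internally.

```python
import math
from typing import Callable, Dict, List

NextWordModel = Callable[[List[str]], Dict[str, float]]


def beam_search(model: NextWordModel, prompt: List[str], beam_width: int = 2,
                max_new_words: int = 5) -> List[str]:
    """Keep the `beam_width` most probable partial sequences instead of only one."""
    beams = [(0.0, list(prompt))]  # (summed log-probability, words)
    for _ in range(max_new_words):
        candidates = []
        for log_prob, words in beams:
            next_words = model(words)
            if not next_words:  # finished sequence: carry it over unchanged
                candidates.append((log_prob, words))
                continue
            for word, prob in next_words.items():
                candidates.append((log_prob + math.log(prob), words + [word]))
        # sorted() is stable (also with reverse=True), so ties keep their original order.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
        if all(not model(words) for _, words in beams):
            break
    return beams[0][1]


# Hypothetical toy model: "a" is the best first word, but "the tracksuit" is the best sequence.
TOY_TABLE = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"jacket": 0.5, "suit": 0.5},
    "the": {"tracksuit": 0.9, "hoodie": 0.1},
}


def toy_model(context: List[str]) -> Dict[str, float]:
    return TOY_TABLE.get(context[-1], {})


print(beam_search(toy_model, ["<s>"], beam_width=1))  # ['<s>', 'a', 'jacket'] (greedy, p = 0.30)
print(beam_search(toy_model, ["<s>"], beam_width=2))  # ['<s>', 'the', 'tracksuit'] (p = 0.36)
```

A wider beam costs more model calls per step, which is the speed trade-off mentioned in the first question.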

Let's get to the second question: one of the most important parameters that we can configure by default is 'temperature'. But what does this parameter affect, exactly?

A higher temperature artificially flattens the probability distribution predicted by the LM, shrinking the gap between the most and least likely next words and thus producing less predictable sentence continuations.

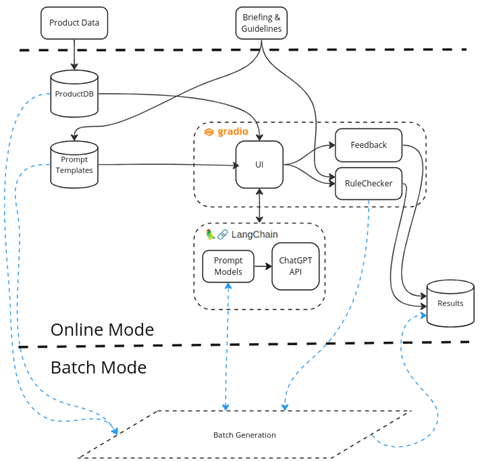
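The effect of temperature can be illustrated with the textbook formulation: the model's raw scores (logits) are divided by the temperature before the softmax turns them into probabilities. The following Python sketch assumes this standard formulation and uses hypothetical logits; OpenAI does not document its exact internal implementation, so treat it as an analogy for the `temperature` parameter rather than a description of it.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert raw model scores (logits) into next-word probabilities.

    Dividing the logits by a temperature > 1 flattens the distribution;
    a temperature < 1 sharpens it towards the most likely word.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [logit / temperature for logit in logits]
    largest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


words = ["dog", "cat", "table"]
logits = [4.0, 3.0, 0.0]  # hypothetical scores for "As a new pet, I'd like a ..."
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(words, probs)})
```

At low temperature almost all probability mass goes to "dog"; at high temperature "table" becomes a noticeably more likely continuation.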

Architecture and software framework

The starting point for generating product descriptions is the product data from the OTTO Webshop. To make this data usable for our application, it is transferred to an internal product database and pre-processed. Together with the briefing and the guidelines supplied by the respective specialist department, this forms our input data.

Prompt templates are created from the briefing and guidelines so the LLM can develop the corresponding product descriptions.

Additionally, this information provides the basis for the discrete rules governing our RuleChecker, an application that analyses the generated product text to check for compliance with the specialist department's product-text specifications.

This application has an online mode and a batch mode. Online allows interactive use as well as experimentation with different prompts and models, while batch is more suitable for the automated creation of several product descriptions.

In online mode it's possible to provide feedback on the generated product descriptions in text form.

At the end of the process the results are saved for later use.

Figure 2: Architecture

LangChain

LangChain is a framework for building applications based on language models. It offers numerous possibilities, ranging from simple queries to various LLMs through to complex chains with agents. In our scenario, we use LangChain as an abstraction layer between our application and OpenAI's ChatGPT model, so that we can try out other LLMs later on.
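The benefit of an abstraction layer can be shown without LangChain itself. The following is not LangChain's API but a plain-Python sketch of the same design idea: the application depends only on a small interface, and concrete backends (ChatGPT, another LLM or a test stub) can be swapped freely. All class and function names here are hypothetical, as is the default temperature of 0.2.

```python
from typing import List, Protocol


class TextGenerator(Protocol):
    """Anything that turns a prompt into text: ChatGPT, another hosted LLM, a local model."""

    def generate(self, prompt: str, temperature: float) -> str:
        ...


class CannedGenerator:
    """Hypothetical stand-in backend, useful for tests without API calls."""

    def __init__(self, reply: str) -> None:
        self.reply = reply
        self.prompts: List[str] = []

    def generate(self, prompt: str, temperature: float) -> str:
        self.prompts.append(prompt)
        return self.reply


def write_product_description(generator: TextGenerator, prompt: str,
                              temperature: float = 0.2) -> str:
    # Application code depends only on the TextGenerator interface,
    # so swapping the backend does not touch this function.
    return generator.generate(prompt, temperature).strip()


backend = CannedGenerator("  <p>Text</p>\n")
print(write_product_description(backend, "Describe a leisure suit."))
```

A real backend would implement `generate` by calling the provider's client library; the rest of the application would stay unchanged.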

Gradio

With the help of Gradio, a Python framework for building web UIs, we were able to create an interface for interactive experimentation quickly and conveniently. Gradio is particularly well suited to building attractive user interfaces for ML applications.

Prompt generation

How do you give ChatGPT exactly the right instructions...? Although there are already mountains of papers and video tutorials on this, as soon as you get hands-on it's a real challenge!

Thanks to the purchase advice we were given, we were able to provide the model with sufficiently detailed input on leisure suits, with prioritised product attributes and USPs as further input. In fact, the prompt eventually became so long that we regularly exceeded the 4,096 tokens available for prompt and response combined.

We can describe the structure of the prompt like this:

General rules

For instance, these rules include gender-appropriate language and the avoidance of certain linguistic devices such as superlatives or passive-voice formulations. They also establish how our customers should be addressed in text form: with children's products, for example, it's important to address the parents directly (e.g. "your kids").

Text structure

The generated text needs to conform to a given HTML structure that contains placeholders for the model to fill in. We implemented this with the following prompt:

'A product description consists of three sections and a final sentence. The structure is:
<h3>x1_head</h3>
<p>x1_body</p>
<h4>x2_head</h4>
<p>x2_body</p>
<h4>x3_head</h4>
<p>x3_body</p>
<p>x4</p>'

This is followed by instructions for filling the respective placeholders.

Prioritised attribute list

The core of the prompt is the list of prioritised product attributes to be mentioned and/or highlighted in the product description. We received the list from OTTO product databases, and attribute prioritisation was defined for us by the respective specialist department.
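A prioritised attribute list can be turned into a prompt section by sorting it by priority before rendering, so that the most important attributes appear first. The Python sketch below assumes each attribute carries a numeric priority (1 = most important); the attribute names and values are hypothetical examples, not OTTO data.

```python
from typing import List, NamedTuple


class Attribute(NamedTuple):
    name: str
    value: str
    priority: int  # 1 = most important, as ranked by the specialist department


def render_attribute_section(attributes: List[Attribute]) -> str:
    """Render the prioritised attribute list as one block of the prompt."""
    ordered = sorted(attributes, key=lambda a: a.priority)
    lines = ["Mention these product attributes, most important first:"]
    lines += [f"{i}. {a.name}: {a.value}" for i, a in enumerate(ordered, start=1)]
    return "\n".join(lines)


attributes = [
    Attribute("Material", "cotton blend", 2),
    Attribute("Target group", "babies", 1),
    Attribute("Pockets", "two side pockets on the jacket", 3),
]
print(render_attribute_section(attributes))
```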

Specific information for each product category

There are technical terms and patented technologies for certain product categories (such as Dri-Fit or Bioshield) that the AI model does not usually know. To be able to highlight the advantages of these technologies in the product description, we first need to describe them to the model in detail. To do this, we requested a list of such technologies from the respective department; whenever a technology name appeared in the product data, we extended the prompt with the description of that technology.
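The lookup described above can be sketched as a simple case-insensitive search for known technology names in the product data. The dictionary contents are placeholders (only the names Dri-Fit and Bioshield come from the article), and plain substring matching is an assumption that could produce false positives for short names.

```python
from typing import Dict, List

# Hypothetical lookup table supplied by the specialist department.
# The descriptions are placeholders, not real product claims.
TECHNOLOGY_DESCRIPTIONS: Dict[str, str] = {
    "Dri-Fit": "<description of Dri-Fit from the specialist department>",
    "Bioshield": "<description of Bioshield from the specialist department>",
}


def technology_notes(product_data: str, descriptions: Dict[str, str]) -> List[str]:
    """Return descriptions for every technology name that occurs in the product data."""
    lowered = product_data.lower()
    return [f"{name}: {text}" for name, text in descriptions.items() if name.lower() in lowered]


def extend_prompt(prompt: str, product_data: str, descriptions: Dict[str, str]) -> str:
    notes = technology_notes(product_data, descriptions)
    if not notes:
        return prompt  # nothing to explain, keep the prompt short
    return prompt + "\n\nBackground on technologies used in this product:\n" + "\n".join(notes)


print(extend_prompt("Write a product text.", "Polyester jacket with Dri-FIT finish",
                    TECHNOLOGY_DESCRIPTIONS))
```

Only adding descriptions when they are needed also helps with the token limit discussed further below.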

Purchase advice for a specific product group

Purchase advice is particularly helpful for customers who are not very familiar with a specific product category. Among other things it highlights product characteristics that deserve special attention. This is ideally suited to helping LLMs expand their already good 'general knowledge' with detailed information for that specific product group, thus enriching the generated product text.

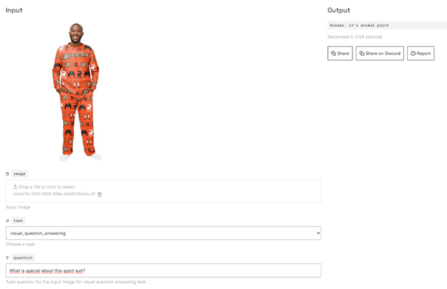

Visual information

We experimented with the Visual Question Answering (VQA) model BLIP-2. VQA models receive an image and a question about it as input and then generate an answer. Our questions concerned unique features of the leisure suit and conspicuous characteristics of the material pattern. For products that particularly stood out in terms of colour and texture, the additional information obtained this way was very helpful.

With product categories that can be described more easily in visual rather than textual form, we hope to achieve even greater added value here.

Figure 3: VQA prompt and output for an eye-catching tracksuit on replicate.com.

Findings

Prompt Engineering can sometimes be frustrating because you're working by trial and error and get no insight into what's really happening inside the model. Here's a summary of some of our findings:

Inventing facts

ChatGPT invents facts when it needs them. For instance, this happens when the model expects the output to be longer than the input facts can support.

It therefore makes sense to allow the model to write a shorter text and to instruct it explicitly not to invent any facts. If we provide the model with enough facts (e.g. a high number of product attributes), this problem occurs less frequently.

Limiting the number of tokens

The limit of 4,096 tokens applies to input and output combined, so if a prompt is too long, the output will be cut off. A 'word' consists of approximately 2 tokens.
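Because prompt and response share the same 4,096 tokens, it helps to check how much room a prompt leaves for the output before sending it. This Python sketch uses the article's rough rule of thumb of two tokens per word; exact counts depend on the model's tokenizer (OpenAI's tiktoken library can compute them), so the constants here are approximations.

```python
CONTEXT_LIMIT = 4096   # GPT-3.5 limit for prompt and response combined (from the article)
TOKENS_PER_WORD = 2    # the article's rough rule of thumb; real counts depend on the tokenizer


def estimate_tokens(text: str) -> int:
    return len(text.split()) * TOKENS_PER_WORD


def output_token_budget(prompt: str, context_limit: int = CONTEXT_LIMIT) -> int:
    """How many tokens are left for the response once the prompt is counted."""
    remaining = context_limit - estimate_tokens(prompt)
    if remaining <= 0:
        raise ValueError("the prompt alone exhausts the context window")
    return remaining


prompt = "word " * 1500             # a hypothetical 1,500-word prompt
print(output_token_budget(prompt))  # 4096 - 3000 = 1096
```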

At least with GPT-3.5, it is wise to limit the prompt to the bare essentials. The token limit will presumably be much less of a constraint in future models (GPT-4 onwards).

Reprompting

'Reprompting', i.e. following up on the output with an additional request, often delivered poor results. For example, the prompt "Write the same text in the same form, but address the customer formally" was interpreted as a request to write a letter.

This suggests that a 'one-shot' approach with a good prompt, a 'few-shot' approach (multiple prompts supplemented by a request to improve the most recently generated text) or progressive fine-tuning are very probably better alternatives.

Sequence within the prompt

Things placed at the end of the prompt are more likely to be fulfilled than those at the beginning.

"Illogical, Captain..." – thinking fast and slow

ChatGPT sometimes goes off the rails because it combines input items incorrectly. For instance, when given 'added value' product features, it forgot that the product was a leisure suit or romper suit for babies and suggested wearing it to go jogging.

It feels as though we can address certain regions of the ChatGPT 'brain' (thinking slow) and free it from its 'fast thinking' imperative by telling it what to look out for, e.g.: 'Consider the target group and its needs.'

Repetitions/synonyms

Despite multiple instructions to avoid repeating words, the model still often broke this rule. We have not found a reproducible and effective solution for this so far.

Layout/structure with template

By specifying a template, we were able to make the model comply with the HTML structure specification (headline, paragraph, etc.). However, it sometimes invents and adds a fourth paragraph.

Model parameterisation with 'temperature'

Raising the temperature does indeed produce more creative suggestions, which move further away from the facts and show more complex sentence structures. In some cases, though, this creativity is not appropriate for product descriptions, so we need to find a sensible balance between fact-based texts ('low temperature') and creative language ('high temperature').

Clean master data

Although ChatGPT can cope with grammatical errors, it is much less robust to incorrect master data. For example, 'sports fashion' and 'casual' are listed as appropriate wearing occasions, although they are really closer to product styles. Moreover, they do not match the occasions recommended in the purchase advice (activities: leisure, outdoor activities, workout, home, romping, playing). A baby leisure or romper suit tagged with a 'sportswear' occasion is likely to confuse the model and make it suggest that babies go jogging and do sports. Listing 'fitness' as an appropriate sport for a baby romper suit also trips ChatGPT up.

The description 'jacket and slacks with two pockets' was interpreted by the model as both garments featuring two pockets each.

Clearly, it's vital to write master data as unequivocally as possible and to make implicit knowledge thoroughly explicit when inputting the prompt data.

Rule-checking and evaluating text quality

To be able to assess the quality of the generated product descriptions, we apply a range of methodologies to check the text and log the results for subsequent analysis.

Plagiarism check

Plagiarism is a key issue, and we want to prevent the LLM from simply 'stealing' existing product descriptions. Many third-party service providers offer plagiarism checks. As a first step, however, we opted for a relatively simple mechanism: we take the individual sentences of the generated product description and Google them for matches on the Web. If the proportion of matching sentences exceeds a predefined threshold (e.g. 15%), we classify the text as plagiarism.
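The sentence-level check can be sketched in Python as follows. The web search itself is left out: `has_web_match` is a hypothetical callback that would wrap the actual search query, and we assume the threshold applies to the share of sentences with at least one match. The regex sentence splitter is deliberately naive.

```python
import re
from typing import Callable, List


def split_sentences(text: str) -> List[str]:
    # Naive split after ., ! or ?; good enough for short product texts.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def plagiarism_ratio(text: str, has_web_match: Callable[[str], bool]) -> float:
    """Share of sentences for which the (hypothetical) web search reports a match."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    matches = sum(1 for sentence in sentences if has_web_match(sentence))
    return matches / len(sentences)


def is_plagiarism(text: str, has_web_match: Callable[[str], bool], threshold: float = 0.15) -> bool:
    return plagiarism_ratio(text, has_web_match) > threshold


# Fake search for demonstration: pretend one sentence already exists on the Web.
known_sentences = {"It has two pockets."}
fake_search = lambda sentence: sentence in known_sentences

text = "This suit is soft. It has two pockets. The fabric is breathable. Ideal for leisure."
print(is_plagiarism(text, fake_search))  # True (1 of 4 sentences = 25% > 15%)
```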

Layout

The briefing provided by the specialist department also comprises a description of what the product description text needs to look like in the end. This includes the length and number of text sections as well as the maximum number of words, for instance.
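A layout check like this can be written with Python's standard-library HTML parser. The following sketch counts headings and paragraphs against the template used in the prompt (one `h3` and two `h4` headings, three body paragraphs plus a final-sentence paragraph) and checks a word limit. The limit of 200 words is a hypothetical placeholder, since the briefing's actual numbers are not given here.

```python
from html.parser import HTMLParser
from typing import Dict, List


class _TagAndTextCollector(HTMLParser):
    """Counts opening tags and collects the visible text of a generated description."""

    def __init__(self) -> None:
        super().__init__()
        self.tag_counts: Dict[str, int] = {}
        self.text_parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        self.tag_counts[tag] = self.tag_counts.get(tag, 0) + 1

    def handle_data(self, data):
        self.text_parts.append(data)


def check_layout(html: str, expected_headings: int = 3, expected_paragraphs: int = 4,
                 max_words: int = 200) -> List[str]:
    """Return a list of layout violations (empty list = layout OK)."""
    collector = _TagAndTextCollector()
    collector.feed(html)
    collector.close()
    problems = []
    headings = collector.tag_counts.get("h3", 0) + collector.tag_counts.get("h4", 0)
    if headings != expected_headings:
        problems.append(f"expected {expected_headings} headings, found {headings}")
    paragraphs = collector.tag_counts.get("p", 0)
    if paragraphs != expected_paragraphs:
        problems.append(f"expected {expected_paragraphs} paragraphs, found {paragraphs}")
    words = len(" ".join(collector.text_parts).split())
    if words > max_words:
        problems.append(f"{words} words exceed the limit of {max_words}")
    return problems


generated = ("<h3>Soft and cosy</h3><p>Body one.</p><h4>Material</h4><p>Body two.</p>"
             "<h4>Fit</h4><p>Body three.</p><p>Final sentence.</p>")
print(check_layout(generated))  # []
```

The same check also catches the invented extra paragraph mentioned in the findings above.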

Structural/syntactic analysis

With the help of the NLP library spaCy, we take a closer look at the text. For example, we make sure it is written in the active voice and contains no superlatives. The text also needs to address our target groups correctly (adults/adolescents/children).

Semantic analyses based on neural networks

Fulfilling specific requirements of the specialist department, such as 'The paragraph header must match the text in the paragraph' or the avoidance of stereotypes, requires a different approach. Methods that only look at the structural or syntactic content of a text are usually not good enough for checks like these. When we need to check the semantic content of a text, generally the only option is to use specialised NLP models trained for a specific task.

We use a neural network model, such as those available on Hugging Face, to check the semantic relationship between headline and paragraph. This kind of model (natural language inference, NLI) predicts whether the relationship between two chunks of text is a 'match' (entailment), a 'contradiction' or simply 'neutral'.

Using another specialised NLP model, also available on Hugging Face, we can classify whether a text contains unwelcome stereotypes or potentially discriminatory wording.

Summary and outlook

Cost aspects

The GPT-3.5 Turbo model we use costs $0.002 per 1,000 tokens, which is still relatively cheap, even for a large text volume.

However, if we want to move up to GPT-4 (only available to selected users at the time we implemented our model), the cost rises to $0.03 per 1,000 tokens (with an 8,000-token context limit) or $0.06 per 1,000 tokens (with a 32,000-token limit).
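To put these prices into perspective, the following Python sketch estimates the cost of a batch run using the flat per-1,000-token prices quoted above. The model labels and the workload (10,000 descriptions at about 3,000 tokens each, prompt plus output) are hypothetical. OpenAI has at times billed prompt and completion tokens at different rates, so this is only a rough estimate; current prices should be checked on OpenAI's pricing page.

```python
from decimal import Decimal

# Flat prices per 1,000 tokens as quoted in the article (USD); the labels are our own.
PRICE_PER_1K_TOKENS = {
    "gpt-3.5-turbo": Decimal("0.002"),
    "gpt-4-8k": Decimal("0.03"),
    "gpt-4-32k": Decimal("0.06"),
}


def estimate_cost(model: str, tokens_per_text: int, number_of_texts: int) -> Decimal:
    """Total cost in USD for generating `number_of_texts` texts of a given token size."""
    total_tokens = tokens_per_text * number_of_texts
    return PRICE_PER_1K_TOKENS[model] * total_tokens / 1000


for model in PRICE_PER_1K_TOKENS:
    # Hypothetical workload: 10,000 descriptions at ~3,000 tokens (prompt + output) each.
    print(model, estimate_cost(model, 3000, 10_000))
```

`Decimal` avoids floating-point rounding artefacts when multiplying small prices by large token counts.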

Since we have little influence over OpenAI's pricing policy, this inevitably creates a dependency.

Possible improvements

The quality of the texts we are generating is already pretty impressive, but there's still room for improvement.

Further research in the area of Visual Question Answering can add major value to visually interesting product groups. Especially in the fashion sector, it helps us create textual descriptions that could not be generated by inputting product attributes alone.

Additionally, we see great potential in including customer reviews. For example, if there is relevant information available here, e.g. that a product looks or fits smaller or larger than advertised, we could input this information as a key criterion in our prompt.

Based on our own experiments, open-source Large Language Models are not yet able to compete with ChatGPT on text-output quality. On the one hand, ChatGPT was trained on an enormous data corpus of different languages, and on the other hand, effectively training a model like this requires extreme hardware resources. Fine-tuning an open-source LLM only makes sense if pre-training has already been carried out on a sufficiently large German-language corpus and the training can be completed with modest hardware resources.

However, we should keep a close eye on open-source developments, as we could then potentially host our model ourselves and obviate any dependency on third-party APIs.
