What is the article about?

When we began developing our new online shop otto.de, we chose a distributed, vertical-style architecture early in the process. Experience with our previous system had shown us that a monolithic architecture cannot keep up with constantly emerging requirements. Growing volumes of data, increasing load and the need to scale the organization: all of these forced us to rethink our approach.

This article describes the solution we chose and explains the reasons behind it.

Monoliths

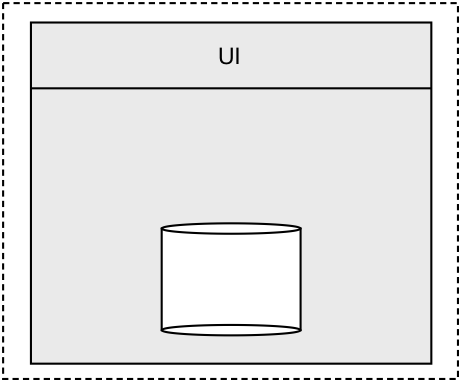

Every new software development project begins with forming a development team. The team settles questions such as which programming language and which framework to use. For server applications (the subject of this article), common choices are Java with the Spring Framework, Ruby on Rails or similar frameworks.

Development starts and, as a result, a single application is created. Along the way, a macro-architecture is chosen implicitly, without anyone challenging it. Its disadvantages only surface slowly as development continues:

- It leads to a heavyweight micro-architecture

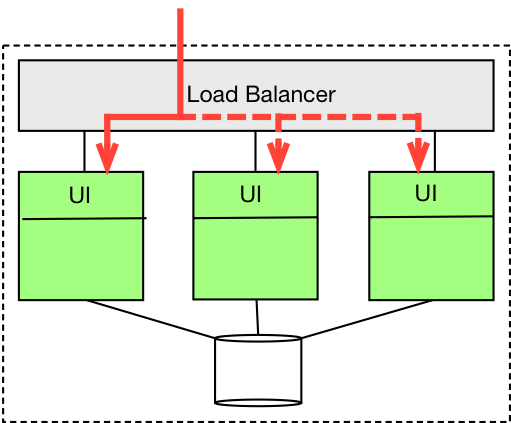

- The scalability is limited to Load Balancing

- Maintainability often suffers, particularly in very large applications

- Zero-downtime deployments become rather complicated, especially for stateful applications

- Development with multiple teams is inefficient and requires a lot of coordination

...just to name a few.

Of course, no new development starts as a “Big Monolith”. In the beginning, the application is slim, easy to extend and easy to understand: the architecture addresses the problems the team has had so far. Over the following months, more and more code is written. Layers are defined, abstractions are found, and modules, services and frameworks are introduced to manage the ever-growing complexity.

Even for a medium-sized application (for example, a Java application with more than 50,000 LOC), a monolithic architecture gradually becomes unpleasant. This applies especially to applications with high scalability requirements.

The slim new development project turns into the next legacy system that the next generation of developers will curse.

Divide and Conquer

The question is: how can this be avoided, and how can we preserve the good parts of small applications inside big systems? In short: how can we achieve a sustainable architecture that still allows efficient development years later?

In software development, there are many concepts for structuring code: functions, methods, classes, components, libraries, frameworks and so on. All of these concepts serve a single purpose: helping developers understand their own applications better. The computer has no need for classes; we do.

Since we software developers have internalized these concepts, a question comes to mind: why should they stay limited to a single application? What prevents us from dissecting an application into a system of several loosely coupled processes?

Essentially, there are three important things to keep in mind:

- Conway’s Law: Development starts with a single team, and in the beginning the application is easy to understand. At first, you only “need” one application.

- Initial costs: Spinning up and operating a new application only appears to be easy. In reality, you need to set up and organize a VCS repository, build files, a build pipeline, a deployment process, hardware and VMs, logging, monitoring tools and so on. All of this often comes with organizational hurdles.

- Complexity in operations: Big distributed systems are clearly more complex to operate than a small load balancer cluster.

If we let things slide, we generally end up not with a system of small, slim applications but with a big, clunky monolith. And the biggest problems only reveal themselves when it is already too late.

Usually, it is clear early on whether an application has to be scalable and whether a large code base can be expected. In that case, you either have to overcome the obstacles mentioned above or live with the consequences forever.

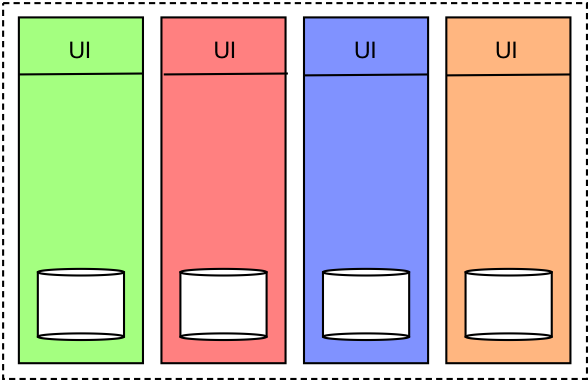

At OTTO, for example, it paid off to establish four cross-functional teams very early in the process, which prevented the development from belonging to a single team. As a consequence, four applications emerged instead of one.

Having already operated a big system in the past, we considered the complexity of operations a solvable problem: in the end, there is little difference between operating 200 instances of a monolith and operating a similar number of smaller systems.

The initial costs of spinning up new servers can be overcome through standardization and automation. Since we are not using cloud services, some groundwork is needed once to set up this automation. After that, you benefit from it for a long time: a cost that pays off.

Scalability

So, how can we design a system made out of small, slim applications instead of a monolith? First, let’s remind ourselves in which dimensions applications can be scaled.

Vertical Decomposition

Vertical decomposition is such a natural approach that it is easily overlooked. Instead of packaging all features into a single application, we split it into several applications that are as independent of each other as possible from the very start.

The system can be cut along business sub-domains. For example, we divided the otto.de online shop into 11 different verticals: Backoffice, Product, Order and so on.

Each of these “verticals” belongs to a single team and has its own frontend, backend and data storage. Sharing code between verticals is not allowed. On the rare occasions where we still want to share code, we do so by creating an open source project.

Verticals are therefore Self-Contained Systems (SCS), as Stefan Tilkov calls them in his presentation on “Sustainable Architecture”.

Distributed Computing

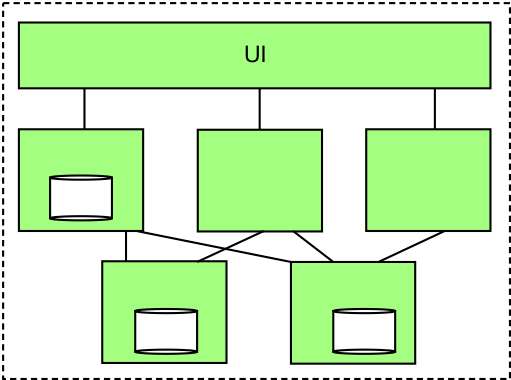

A vertical can still be a relatively big application, so you might want to divide it further: preferably by splitting it into more verticals, or by breaking it into components through distributed computing, where the components run in their own processes and communicate with each other via, for example, REST.

In doing so, the application is split not only vertically but also horizontally. In such an architecture, requests are accepted by a service and the processing is distributed over multiple systems. The sub-results are then combined into one response and sent back to the requester.
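A minimal sketch of this request flow in Python, under stated assumptions: the three downstream services (`fetch_price`, `fetch_stock`, `fetch_reviews`) are hypothetical and simulated by local functions, whereas in a real system each would run in its own process and be called via REST. The entry service fans the request out concurrently and merges the sub-results into one response.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical downstream services. In a real system each would be a
# separate process reached via, for example, a REST call.
def fetch_price(product_id: str) -> dict:
    return {"price": 19.99}

def fetch_stock(product_id: str) -> dict:
    return {"in_stock": True}

def fetch_reviews(product_id: str) -> dict:
    return {"rating": 4.5}

SERVICES = [fetch_price, fetch_stock, fetch_reviews]

def handle_request(product_id: str) -> dict:
    """Distribute one request over all services and combine their sub-results."""
    with ThreadPoolExecutor(max_workers=len(SERVICES)) as pool:
        # Call all services concurrently.
        futures = [pool.submit(service, product_id) for service in SERVICES]
        response = {"product_id": product_id}
        for future in futures:
            response.update(future.result())  # merge each sub-result
    return response

if __name__ == "__main__":
    print(handle_request("4711"))
```

Note that the entry service waits for all sub-results, so the slowest service determines the overall response time.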

The individual services do not share a common database schema, because that would couple the applications tightly: changing the schema would mean that a service can no longer be deployed independently of the other services.

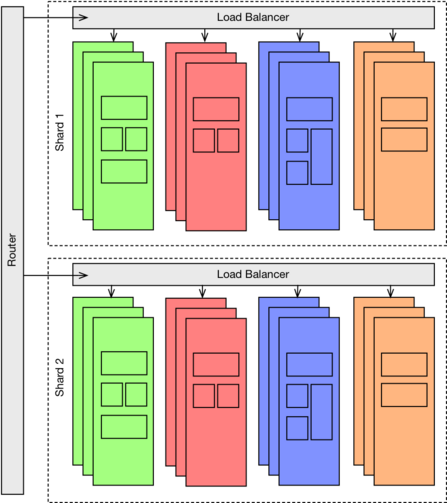

Sharding

Sharding, a third way of scaling a system, can be appropriate when very large amounts of data are processed or when a decentralized application is operated, for example, when a service needs to be offered worldwide.

However, as we are not currently using sharding, I will not go into further detail here.

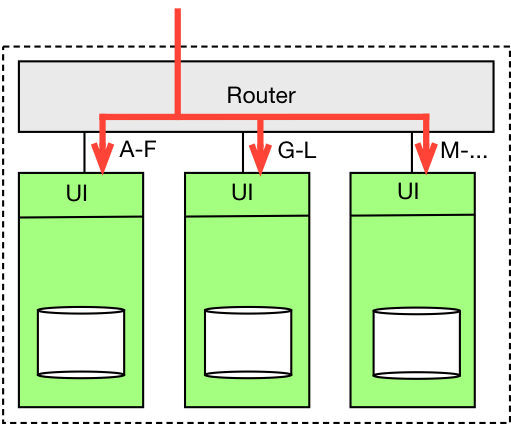

Load Balancing

As soon as a single server can no longer handle the load, load balancing comes into play: the application is cloned n times, and a load balancer distributes the load among the clones.
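As a minimal illustration, the sketch below distributes requests over n clones using a simple round-robin strategy. The instance addresses are hypothetical, and real load balancers additionally perform health checks and offer other strategies, which this sketch omits.

```python
from __future__ import annotations

import itertools

class RoundRobinBalancer:
    """Distribute incoming requests evenly over n identical instances."""

    def __init__(self, instances: list[str]) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        # Endless iterator over the instances in a fixed order.
        self._cycle = itertools.cycle(instances)

    def pick(self) -> str:
        # Each call returns the next instance in turn.
        return next(self._cycle)

# Hypothetical addresses of three clones of the same application.
balancer = RoundRobinBalancer(["app-1:8080", "app-2:8080", "app-3:8080"])
targets = [balancer.pick() for _ in range(6)]
print(targets)
```

Because every clone is interchangeable, the clones typically share one database, which leads to the bottleneck described below.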

Instances of a load-balanced application often share the same database, which can become a bottleneck unless there is a good strategy for scaling it. This is one of the reasons why NoSQL databases have become established in recent years: they can often scale better than relational databases.

Maximum Scalability

All of the mentioned solutions can be combined with each other in order to reach almost any level of scalability within the system.

Of course, this can only be a means to an end. Without the corresponding requirements, the result can easily become too complex. Fortunately, you can move towards your target architecture in small steps instead of starting with an enormous plan.

At otto.de, for example, we started with four verticals plus load balancing. Over the last three years, more verticals were added, and some of them have grown too big and bulky. So we are currently introducing microservices and extending the architecture of individual verticals towards distributed computing.

Microservices

The term microservice has become very popular recently. Microservices are an architectural style in which a system is sliced along business domains into fine-grained, independent parts.

In this context, a microservice can be a small vertical as well as a service in a distributed computing architecture. What distinguishes it from traditional approaches is its size: a microservice should implement just a few features from a particular domain and be completely understandable by a single developer.

A microservice is typically small enough that several of them can run on a single server. We have had good experience with “Fat JARs”, which can easily be executed via `java -jar <file>` and, if needed, start an embedded Jetty or similar server.

To simplify deploying and operating the different microservices on a single server, each microservice runs in its own Docker container.

REST and microservices are a good combination and are well suited to building larger systems. A microservice could, for example, be responsible for a single REST resource. Service discovery can be solved, at least partially, via hypermedia. Media types help with versioning interfaces and keep service deployments independent.
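The following Python sketch illustrates both ideas under assumptions that are not taken from our actual APIs. A hypothetical entry-point document lists links by relation name, so clients only hard-code one URL plus relation names. The server then picks a representation version from the client's `Accept` header, using hypothetical vendor media types (`application/vnd.example.product.v1+json` and `v2`), so old clients keep working while new ones migrate.

```python
from __future__ import annotations

# Hypothetical entry-point document: clients only need to know this one URL
# and the relation names, not the paths of the individual resources.
HOME_DOCUMENT = {
    "links": [
        {"rel": "products", "href": "/product/products"},
        {"rel": "search", "href": "/san/search"},
    ]
}

def resolve_link(document: dict, rel: str) -> str:
    """Look up a resource URL by its link relation instead of hard-coding it."""
    for link in document.get("links", []):
        if link.get("rel") == rel:
            return link["href"]
    raise KeyError(f"no link with rel={rel!r}")

def product_v1(p: dict) -> dict:
    # Old representation, still served until all clients have migrated.
    return {"id": p["id"], "name": p["title"]}

def product_v2(p: dict) -> dict:
    # New representation with a renamed field and an additional attribute.
    return {"id": p["id"], "title": p["title"], "price": p["price"]}

# Hypothetical vendor media types, one per representation version.
REPRESENTATIONS = {
    "application/vnd.example.product.v1+json": product_v1,
    "application/vnd.example.product.v2+json": product_v2,
}

def negotiate(accept_header: str, product: dict) -> tuple[str, dict]:
    """Render the product in the first supported media type from the Accept header.

    Simplified: quality values (q=...) and wildcards are ignored.
    """
    for part in accept_header.split(","):
        media_type = part.split(";")[0].strip()
        if media_type in REPRESENTATIONS:
            return media_type, REPRESENTATIONS[media_type](product)
    raise ValueError("no acceptable representation (HTTP 406)")

product = {"id": "4711", "title": "Shirt", "price": 19.99}
print(resolve_link(HOME_DOCUMENT, "products"))
print(negotiate("application/vnd.example.product.v1+json", product))
print(negotiate("application/vnd.example.product.v2+json", product))
```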

Altogether, microservices have a number of good properties, some of which are:

- Development in a microservice architecture is fun: every few weeks or months, you get to work on a new development project instead of an oversized, archaic old system

- Due to their small size, microservices require less boilerplate code and no heavyweight frameworks

- They can be deployed completely independently of one another, which makes establishing continuous delivery or continuous deployment much easier

- The architecture supports development by several independent teams

- Each service can use the most appropriate programming language. You can try out a new language or framework without introducing major risks into the project. However, it is equally important not to get carried away by this new freedom

- Since they are so small, they can be replaced by a new development at reasonable cost

- The scalability of the system is considerably better than in a monolithic architecture, since every service can be scaled independently from the others

Microservices comply with the principles of agile development: a new feature that does not satisfy customers can not only be built quickly, but also be removed just as quickly.

Macro- and Micro-Architecture

Traditionally, the term software architecture refers to the architecture of a single program. In a vertical or microservice-style architecture, a definition like “Architecture is the decisions that you wish you could get right early in a project” is hardly relevant anymore. What is hard to change in a microservice-style architecture? No longer the internal components of an application. What is hard to change are some of the decisions made about the microservices, for example, how they are integrated into the system or which communication protocols the applications use to talk to each other.

Thus, at otto.de we distinguish between the micro-architecture of an application and the macro-architecture of the system. The micro-architecture covers the internals of a vertical or microservice and is left completely in the hands of the respective team.

The macro-architecture, on the other hand, is defined by the interactions between the services, and it is worth setting some guidelines for it. At otto.de, they are the following:

- Vertical decomposition: The system is cut into multiple verticals, each belonging entirely to one team. Communication between verticals has to happen in the background, not while a user request is being processed.

- RESTful architecture: The communication and integration between the different services takes place exclusively via REST.

- Shared nothing architecture: There is no shared mutable state through which services exchange information or share data. There are no HTTP sessions, no central data storage and no shared code. However, multiple instances of the same service may share a database.

- Data governance: For each data point, there is a single system in charge, a single “truth”. Other systems have read-only access to the data provider via a REST API and copy the data they need into their own storage.

Our architecture is going through a development cycle similar to that of many other architectures. At the moment, we are standardizing how microservices will be used at OTTO.

Integration

So far, I have focused on how a system can be divided. However, the user is ultimately at the heart of all our development, and we want our software to feel consistent, all of a piece.

So the question becomes: how can we integrate a distributed system so that customers do not notice its distributed nature?

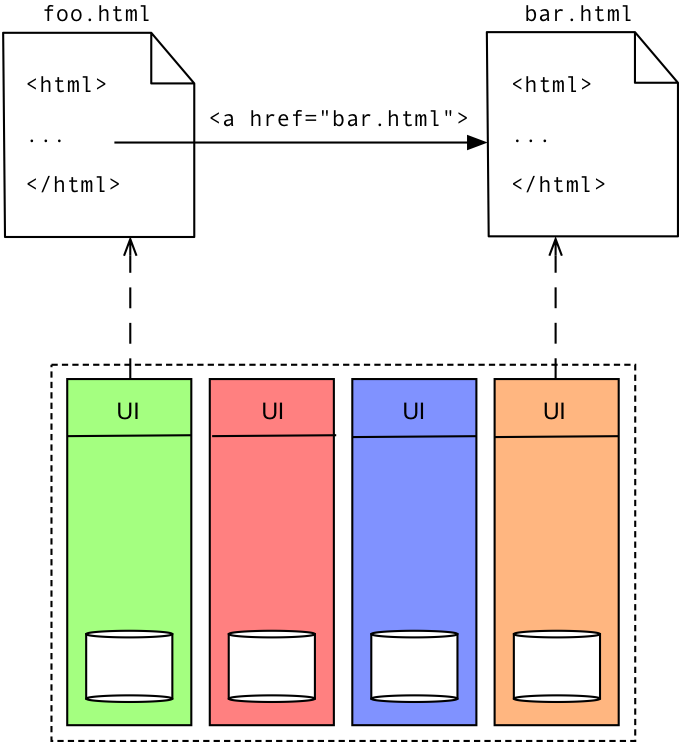

Hyperlinks

The simplest way to integrate services in the web frontend is with hyperlinks.

Each service is responsible for different pages, and navigation takes place via links between these pages.

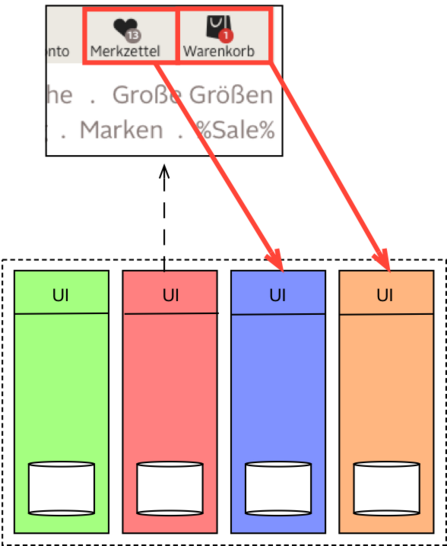

AJAX

Using AJAX to load parts of a page via JavaScript and integrate them into it is another obvious option.

AJAX is suitable for areas of the site that are less important or not immediately visible, and it allows us to assemble pages from different services.

The dependencies between the services involved are small: they mainly need to agree on URLs and media types.

Asset Server

Of course, all pages of the system have to look consistent. In addition, the distributed services need to agree on which JavaScript libraries they use, and in which versions.

Static assets like CSS, JavaScript and images are therefore delivered by a central asset server in our system.

Deploying and versioning these shared resources in a vertically sliced system is a topic of its own that would deserve a separate article. Without going into too much detail: services need to be deployed independently while their assets are shared, and this creates challenges of its own.

Edge-Side Includes

One of the less well-known methods for integrating page components from different services is Server-Side or Edge-Side Includes (SSI or ESI). Whether you use Varnish, Squid, Apache or Nginx, the major web servers and reverse proxies support these includes.

The technique is simple: a service places an include statement with a URL in its response, and the web server or reverse proxy resolves that URL. The proxy follows the URL, receives a response, and inserts the body of that response into the page in place of the include.

In our shop, for example, every page includes the navigation from the Search & Navigation (SAN) service in the following way:

```html
<html> ... <esi:include src="/san/..." /> ... </html>
```

The reverse proxy (in our case Varnish) parses the page and resolves the URLs of the include statements on it. SAN then supplies the HTML fragment:

```html
...
```

Varnish replaces the include with the HTML fragment and delivers the page to the user:

```html
<html> ... ... </html>
```

In this way, pages are assembled from fragments of different services without the user noticing.
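To make the mechanism concrete, here is a deliberately simplified Python sketch of what the proxy does; it is not how Varnish implements ESI. It finds `<esi:include src="..." />` tags with a regular expression and replaces each one with whatever a fetch function returns for its URL. The `fake_fetch` function, the `/san/navigation` path and the fragment markup are hypothetical stand-ins for the HTTP call to SAN.

```python
import re
from typing import Callable

# Matches e.g. <esi:include src="/san/navigation" /> (src in double quotes).
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')

def resolve_includes(page: str, fetch: Callable[[str], str]) -> str:
    """Replace every ESI include tag in the page with the fetched fragment."""
    # With a function as replacement, re.sub inserts its return value literally.
    return ESI_INCLUDE.sub(lambda match: fetch(match.group(1)), page)

# Hypothetical fragment service standing in for an HTTP request to SAN.
def fake_fetch(url: str) -> str:
    fragments = {"/san/navigation": "<nav>Women | Men | Living</nav>"}
    return fragments[url]

page = '<html><body><esi:include src="/san/navigation" /><main>...</main></body></html>'
print(resolve_includes(page, fake_fetch))
```

A production proxy additionally has to deal with slow or failing fragment services, caching and nested includes, all of which this sketch ignores.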

Data Replication

The techniques above take care of frontend integration. For services to fulfill their requirements, a further problem needs to be solved: services need common data, yet they must not share a database. In our e-commerce shop, for example, several services need to process product data, each in its own way.

One solution is data replication. All services that need product data, for example, regularly ask the responsible vertical, Product, for the data they need, so that changes are detected quickly.

We do not use message queues that “push” data to their clients either. Instead, services “poll” an Atom feed whenever they need updates and are able to process them.
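A minimal sketch of such a polling consumer in Python, assuming a hypothetical, trimmed-down product feed in which each entry carries an `id` and an `updated` timestamp in UTC. The consumer decides when to poll and only processes entries newer than the last timestamp it has seen (its "high-water mark"). Fetching the feed over HTTP is left out so that the parsing logic stands alone.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from datetime import datetime, timezone

ATOM_NS = "{http://www.w3.org/2005/Atom}"

def parse_time(value: str) -> datetime:
    # Assumes UTC timestamps such as "2015-09-30T10:15:00Z".
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)

def poll_feed(feed_xml: str, last_seen: datetime) -> tuple[list[str], datetime]:
    """Return IDs of entries updated after last_seen (oldest first) and the new mark."""
    root = ET.fromstring(feed_xml)
    updates = []
    for entry in root.findall(f"{ATOM_NS}entry"):
        updated = parse_time(entry.findtext(f"{ATOM_NS}updated"))
        if updated > last_seen:
            updates.append((updated, entry.findtext(f"{ATOM_NS}id")))
    updates.sort()  # process changes in chronological order
    new_mark = updates[-1][0] if updates else last_seen
    return [entry_id for _, entry_id in updates], new_mark

# Hypothetical feed with two product changes (real Atom feeds carry more elements).
FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry><id>urn:product:1</id><updated>2015-09-30T10:00:00Z</updated></entry>
  <entry><id>urn:product:2</id><updated>2015-09-30T11:00:00Z</updated></entry>
</feed>"""

changed, mark = poll_feed(FEED, parse_time("2015-09-30T10:30:00Z"))
print(changed, mark)
```

The consumer would store the mark, copy the changed products into its own storage, and pass the mark to its next poll. The feed itself needs no knowledge of who is polling it.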

In the end, we have to be able to deal with temporary inconsistencies. In a distributed system like ours, these can only be avoided at the cost of the services’ availability.

No Remote Service Calls

In theory, we could avoid replicating data in some cases by having our services access other services synchronously (that is, while processing a single request) when needed. A shopping basket, for example, could do without storing a redundant copy of the product data and instead query the product vertical directly whenever the basket is displayed.

We avoid this for the following reasons:

- Testability suffers when major features depend on a different system

- A slow service can affect the availability of the entire shop through a snowball effect

- The scalability of the system is limited

- Independent deployment of services becomes difficult
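A back-of-the-envelope calculation makes the availability point concrete. Under the simplifying assumption that services fail independently, a request that synchronously needs n services, each available 99.9% of the time, succeeds only with probability 0.999^n. The figures are illustrative, not measurements from otto.de.

```python
def chained_availability(per_service: float, n_services: int) -> float:
    """Availability of a request that needs all n services to answer synchronously.

    Assumes independent failures, so the individual probabilities multiply.
    """
    return per_service ** n_services

for n in (1, 5, 20):
    print(n, round(chained_availability(0.999, n), 4))
```

With replicated data, the shopping basket from the example above would not depend on the product vertical being available at request time.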

So far, we have had a great experience with separating verticals. It definitely helps, at least in the early stages, to learn to stick to a strict separation. With vertical decomposition, services can be developed, tested and shipped independently of one another.

Lessons Learned

After three years of working on a system designed in the way outlined above, we have had, all in all, a very good experience. The biggest compliment for a concept is, of course, when someone else finds it interesting, or decides to copy it.

Looking back, if I were to do something differently, it would be to break up the monolith into even smaller pieces earlier in the process. The near future of otto.de definitely belongs to microservices in a vertical-style architecture.

Many Thanks to...

Anastasia for translating this text

Informatik-Aktuell.de for giving permission to publish the English translation of the original article.

44 Comments

- g2-8995997ddd66f90460b037839b8a0bdc, 01.10.2015 09:23

The fact that you disallow code sharing unless the code is open source seems like a crazy anti-pattern. You could build microservices in a shared repo, use proper testing and get most of the benefits here without reinventing the wheel multiple times. Twitter, Google and Facebook go in this direction. ESI is an interesting approach, but including a snippet of HTML from another team is scary, especially if it depends on common JavaScript that isn't integrated together during the build.

- 02.10.2015 17:37

For the ui separation that is also called "losa", I read that first in a presentation from the architect of Gilt.

I'm trying to achieve the same UI reuse as you do with Edge Side Includes, but with web components, which allow HTML imports.

I like your quote about domain driven in your last reply. In fact I'm using the term of " Domain service" to explain how we should split our services.

My main concern is API compatibility/SOA governance. Do you have some tips for that? How can you ensure that services do not break one another, and how do you manage a breaking change in a service?

- 01.10.2015 17:50

When you split to verticals of verticals do you allow code sharing between those internal components?

- 08.10.2015 15:54

[…] On Monoliths and Microservices […]

- Guido Steinacker, 01.10.2015 17:57

We really try to avoid this beside of our open source libraries. There are only very few exceptions - and typically, we could and should open-source these exceptions, too.

If you are able to vertically decompose your system, every vertical is a bounded context (=> Domain Driven Design).

- 01.10.2015 18:58

[…] English translation is now available here: http://dev.otto.de/2015/09/30/on-monoliths-and-microservices/ […]


- 02.10.2015 12:52

Totally agree with you here... We also started out with sharing code between different microservices, but decided to lose that. It was kind of holding us back to make changes to the microservices. If not all of your code is within your microservice, you end up with a smaller distributed monolith and people are hesitant to change code in for instance such a shared piece of code. You never know what you might break...

- frastel, 13.10.2015 10:36

Thanks for this great article. It provides a very good overview about why bigger companies should move to microservices these days. At Chefkoch.de we are currently in the migration phase towards microservices too and facing different problems. Maybe we could have a little discussion about those problems as parts of them are already mentioned in your article.

1. With a microservice architecture the product teams have the possibility to develop and deploy as isolated as possible. Teams have their own development process, their own build and deploy pipelines. They manage their own databases, data caches etc. But what about other bottlenecks in the infrastructure? We recognized two bottlenecks still existing in our infrastructure: the router which has to know all services even with SkyDNS or other service discovery functionality and the reverse proxy.

1.1 We currently have one scaled but "monolithic" reverse proxy which is responsible for the HTTP caching of all microservices and the still existing legacy monolith. We currently try to split the reverse proxy functionality and move HTTP caching towards the specific services. How do you combine your services with reverse proxies?

1.2 The router has to know every service, as every incoming request has to be routed to the corresponding service. New services have to be registered there. This is no big deal, but it feels a little bit strange and scary in a microservice architecture. Every isolated team has to manage and modify this central component in the infrastructure. How do you handle the registration of new microservices with your router?

2. You already explained that the verticals combine UI and business logic, but the microservices contain only business logic and provide a REST interface for it. How do product teams implement the UI in your case then? Is there a central UI layer which communicates with the different services? Or does every product team also have its own isolated UI services? Currently all our microservices provide a UI besides the REST interface, and we have a contract about the allowed and provided JavaScript functionality and so on. However, we struggle with this approach. Placing the UI outside or inside of microservices is IMHO an open question. Some developers prefer moving the UI inside the microservice and others refuse this. I would really love to see an additional article about how you handle the UI if this couldn't be answered briefly in the comment area.

- 02.10.2015 15:39

I am planning to go with this kind of design for one client, but I was leaning toward using a message queue to push relevant data. i.e. if someone requests to launch a long-running job from the web front-end, it would send the relevant data into an AMQP queue that would be monitored by the services that run said jobs. Could you expand on why you avoided the message queue in favor of polling?

- 13.10.2015 09:17

"Pushing data IMHO binds services more than polling data." I have a question about this point as I don't get it: The polling mechanism needs to poll from somewhere. This mechanism has to be coupled with this knowledge (where is the endpoint located?) and services are bound. So I don't get this point here.

Talking about pushing: Pushing data could be implemented in different ways. When one publishes the complete document of some updated data e.g. a product in the message you have no coupling at all. The consumer service just receives the documents and works with it. It does not need to know where the message is published from originally. Binding of services is avoided here. For testing a consuming microservice you don't have to implement a MQ in the first step. The source of the incoming document could be completely mocked, so infrastructure overhead could be minimized. This doesn't apply to integration steps. At this testing level you have to face this overhead, that's true.

- Guido Steinacker, 01.10.2015 09:55

In the beginning, I was thinking the same way and in fact we started with some 'common' libraries shared between verticals. After some months we realized that it's not worth the trouble to sync the development of common code between teams. If you cut your verticals and microservices by business domains, you quickly realize that the same thing ('product', for example) differs between the services. Sharing code would have the effect that things are leaking from one service to another.

One advantage of reinventing the wheel is that, after some time, you get better wheels. More important: the teams are more independent and, all in all, more efficient.

The stuff that is really worth sharing can be open sourced, leading to higher quality. Today I am completely convinced that this approach is better than trying to apply DRY across independent services. At least in our situation, having a vertically decomposed system :-)

Somewhere you have to integrate. Compared to other solutions, edge side integration is easy from the perspective of changing API formats (HTML), introduces failure units to improve fault tolerance, and so on. But yes: Javascript and CSS is difficult to handle, that's why we introduced an 'Asset' Server. And, of course, integration tests.

- joel stewart, 04.10.2015 16:26

Do you perform any BI on data sourced in your microservices? Do you only use REST to populate your BI database?

Would you ever consider using a common event logging system for monitoring your services?

- Oldrich Kepka, 05.10.2015 09:00

We ended up with a very similar architecture in HP Propel. We also use polling and no blocking calls to our APIs.

Our use of polling has several advantages:

1) Simpler deployment (you don't need queueing middleware).

2) Your API calls do not depend on whether the middleware is running.

3) Higher service independence compared to queues or direct service calls.

Independence is a very powerful property of polling, which would need more elaboration. But in short, a service doesn't need to know who is polling its data or how many of these clients are out there.

- Guido Steinacker, 06.10.2015 21:08

Of course we also have BI systems. One of the verticals ('Tracking') is gathering and processing the tracking events. Data is delivered into different data sinks, one of them being a BI system where Hadoop and lots of other tools are used to extract all kinds of information. This system is separate from the shopping platform, and I do not know too much about its architecture.

Regarding logging: we are using Splunk + the ELK (ElasticSearch+Logstash+Kibana) stack to gather logs from all the different applications. But this is not specific to microservices: aggregating logs is a common problem in all kinds of distributed systems.

- Guido Steinacker, 06.10.2015 21:23

API compatibility is relatively easy to achieve in RESTful systems: representations are defined using media types. A client accepts one or possibly multiple media types. Servers must make sure that they can still serve "deprecated" media types until all clients have migrated to the new ones.

In order to make APIs more robust, we expect every service to be able to handle extensions to a representation at any point in the document (mostly XML or JSON).

CDC tests (consumer-driven contract tests) are used to ensure contracts between services early in the build pipelines: if team A is using a service of team B, they write a test that checks team A's expectations about the behavior of the service. The test is added to team B's deployment pipeline. Every time team B breaks this contract, the test fails, and the change will not go live until either the test or the breaking change is fixed.

- Guido Steinacker, 06.10.2015 21:28

I absolutely agree...

- Guido Steinacker, 06.10.2015 22:22

See Oldrich Kepka's comment... In addition, I would mention the testability of services: without extra infrastructure components like MQ servers, it is generally easier to test a service. A solvable problem, but still... it's easier.

In our case, AMQP would possibly not be scalable enough, but this depends on your non-functional requirements. I would never say that message queues are not a possible solution for distributing data. But letting clients decide when they are able to process more data is more flexible in terms of failure tolerance, different requirements regarding consistency, and so on.

Pushing data IMHO binds services more than polling data.

- 13.10.2015 13:57

About ESI inclusion, check out this open source project : esigate.org

- 15.10.2015 16:36

[…] is Otto.de, about which I did a talk together with their tech lead (and which you can read about in this nice write-up by one of their lead architects). But there are quite a few others as well, and I remain convinced it’s a good idea to start […]

- Guido Steinacker, 15.10.2015 18:15

There is a great article by Uwe Friedrichsen about why (and when) re-use is inefficient. If you wonder how educated developers could knowingly ignore DRY principles in the context of microservices (no shared code), read https://blog.codecentric.de/jobs/en/2015/10/the-broken-promise-of-re-use/

- Christian Stamm, 15.10.2015 19:13

In addition to what Guido said, I'd like to recommend this excellent, if somewhat long, article on re-use:

https://blog.codecentric.de/jobs/en/2015/10/the-broken-promise-of-re-use/

"Strive for replaceability, not re-use. It will lead you the right way"

Everything you find on https://github.com/otto-de/ has a re-use factor of at least two, often much higher.

- 04.11.2015 21:56

Thanks for the article; it really confirmed an experience I had in a similar project.

I have one question regarding the "developer experience" in your architecture. How do you ensure that it's a "nice, easy and good" way for developers to work and integrate with other slices/services?

This is at least one area where a true monolith could excel: you have the whole application in your IDE (even if it might slow it down), you just hit "run", and everything "works". (I'm not pro-monolith, and I'm against big projects/solutions in the IDE, but at least that seems somewhat reasonable...)

Do you use, e.g., nightly builds/deployments for services that are not actively developed?

Do you try to run everything on a single local machine?

Do you try to write each service completely on its own? If so, how do you test integration (e.g. with ESI)? Only on deployment?

How do you include the assets on a dev machine? Do you use the ones from the last nightly build/deployment? What if I need to change something there?

These are some points that bother me, where I don't have a nice solution or I'm not sure whether it's a good approach.

Can you share some experiences?

- Guido Steinacker, 05.11.2015 21:07

Hi Christian,

Developers do not need to run fully integrated services; every service is loosely coupled, and it is possible to run it locally in your IDE. Some services do not serve HTML pages, only fragments (for example, product recommendations that are integrated into pages from other verticals). In this case, we use optional request parameters that tell the vertical to render not only the fragment but a complete page with CSS/JS around it, so developers can actually use the fragment.
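Here is a minimal sketch of such an optional parameter. It assumes a parameter named `standalone` and uses placeholder asset URLs, because the actual parameter names and page template are not given here. Without the parameter, the handler returns only the fragment. With it, the fragment is wrapped in a page that loads the CSS/JS.

```python
from html import escape
from urllib.parse import parse_qs, urlsplit

# Placeholder URLs for the shared CSS/JS; not real Otto endpoints.
ASSET_CSS = "https://assets.example.com/main.css"
ASSET_JS = "https://assets.example.com/main.js"

def render_recommendations(products):
    items = "".join(f"<li>{escape(p)}</li>" for p in products)
    return f'<ul class="recommendations">{items}</ul>'

def handle(url, products):
    """Return only the fragment by default; wrap it in a page if ?standalone=true."""
    fragment = render_recommendations(products)
    query = parse_qs(urlsplit(url).query)
    if query.get("standalone") != ["true"]:
        return fragment  # normal case: another vertical includes this via ESI/AJAX
    return (
        "<!DOCTYPE html><html><head>"
        f'<link rel="stylesheet" href="{ASSET_CSS}">'
        f'<script src="{ASSET_JS}" defer></script>'
        f"</head><body>{fragment}</body></html>"
    )
```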

We continuously integrate all changes in the CI stage, so every developer can check the integration with other systems a few minutes after a git push. Of course, we also have automated regression tests that check whether fragments on a page work as expected.

All tests can be executed not only in the different stages but also locally on the developer's machine. Before changes are pushed, all tests are executed. This requires tests to run really quickly: in our case, the biggest application has some 5,000 tests that run in less than five minutes. Test performance is critical, because if tests are too slow, developers might push without running them, and other developers would then run into problems.

We do not have nightly builds. Instead, we have continuous builds using a build/deployment pipeline for every single service. Every pushed code change triggers the pipeline: the code is compiled, tested, packaged and deployed, integration tests are executed, and then the package is deployed to the next stage, and so on. Services that are not actively developed are rare; at least in my team, every service is changed at least every few days. If you have such services, I could imagine that it is good practice to deploy them every few days to make sure the deployment itself still works.
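The fail-fast behavior of such a per-service pipeline can be sketched as follows. The step names and the two stages (`ci`, `live`) are hypothetical placeholders. Each step is a stand-in callable rather than a real build-tool invocation.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]

def run_pipeline(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order and stop at the first failure.

    Returns (succeeded, names of the steps that ran). A failing step
    blocks every later step, so a broken change never reaches the next stage.
    """
    executed = []
    for name, step in steps:
        executed.append(name)
        if not step():
            return False, executed
    return True, executed

def pipeline_for(commit: str, stages: List[str],
                 tests_pass: Callable[[str], bool]) -> List[Step]:
    """Hypothetical pipeline triggered by a single pushed commit of one service."""
    steps: List[Step] = [
        ("compile", lambda: True),
        ("unit-test", lambda: tests_pass(commit)),
        ("package", lambda: True),
    ]
    for stage in stages:
        # Deploy to the stage, then verify it before promoting further.
        steps.append((f"deploy:{stage}", lambda: True))
        steps.append((f"integration-test:{stage}", lambda: tests_pass(commit)))
    return steps
```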

Assets are served by a separate asset server. A push may include changes to assets, which triggers a deployment of the assets to the asset server. Most of the time, though, we put changes behind feature toggles anyway, because we want to separate deployments from customer-facing changes. However, handling assets is possibly the most difficult part of our architecture, because this is where all verticals have to integrate.
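Separating deployment from release is commonly done with feature toggles. The minimal sketch below assumes toggles are read from environment variables named `TOGGLE_<NAME>`. That convention and the `FeatureToggles` class are assumptions for illustration, not the toggle mechanism Otto actually uses.

```python
import os

class FeatureToggles:
    """Minimal toggle lookup; the TOGGLE_<NAME> convention is an assumption."""

    def __init__(self, environ=None):
        self.environ = os.environ if environ is None else environ

    def is_active(self, name: str) -> bool:
        # Toggles default to off, so deployed but unreleased code stays dark.
        return self.environ.get(f"TOGGLE_{name.upper()}", "off") == "on"

def product_page(toggles: FeatureToggles) -> str:
    # Both code paths are deployed; the toggle decides which one customers see.
    if toggles.is_active("new_gallery"):
        return "new gallery"
    return "old gallery"
```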

To automatically check for breaking changes in the frontend, we use not only JS and Selenium tests but also tools like Lineup (https://github.com/otto-de/lineup).

- Michael, 28.10.2015 11:04

Thank you, Guido and team, for this great article and for sharing your knowledge and insights. I really appreciate it. I was wondering whether, during the early design phases or at later stages, you considered Event Sourcing/CQRS patterns for some systems or for the macro architecture. If so, why did you choose not to go the CQRS route?

- 29.10.2015 10:23

[…] Otto development blog on monoliths and microservices […]

- 29.10.2015 12:03

On a side note and unrelated to the architectural discussion, the one-liner summary of Conway's law seems to misrepresent the core idea behind the law:

Conway’s Law: The development starts with a team, and in the beginning, the application is very understandable: at first, you have a “need” for just one application.

I would rephrase this to bring it more in line with the corresponding Wikipedia page https://en.wikipedia.org/wiki/Conway%27s_law. Here is my attempt:

Conway's law: Team structure within large organizations is only effective if it mirrors "real-people communication" behavior, which leads to small interdisciplinary teams with short internal communication routes.

- fritzduchardt, 29.10.2015 14:31

On a more architectural note, here is a question about code sharing (this might be Java-centric): when DTOs are passed between microservices in JSON or XML format, the payload is commonly generated automatically from beans. Those beans also have to be present on the consumer side for deserialization. With your "share only open-source code" methodology, how would you make those DTOs available to all interested parties?

- Guido Steinacker, 31.10.2015 02:30

Hi Frastel,

Sorry for the delay, I had some days off...

For part 1: we have a 'platform engineering' team that provides self-services for the development teams (among other things, such as 24/7 operations of the platform). One of these self-services is the ability to create and deploy new microservices, including load balancing and routing. This is accomplished using Varnish proxy servers and a brand-new, shiny Mesos cluster management. This way, no dev team has to configure central infrastructure components; instead, they use services provided by the platform engineering team.

I can't tell you much about the details of the proxy etc., because it's not my area. At least we do not have real bottlenecks here: Varnish is really fast, and with three or more Varnish servers running in parallel, this is not a real problem right now. If you have more questions, please come back to me; I should be able to find someone with more knowledge in this area.

Regarding 1.1: why do you think your reverse proxy is a monolith? If you only use it as a reverse proxy (plus router and load balancer) without misusing it for other things, it simply handles HTTP requests, and that is IMHO perfectly fine. Moving caching down might be OK (maybe even good...), but I would prefer cascading reverse proxies to server-side caching. The extra hop should not be a big deal. Currently, we only have one layer of "page assembly" proxies, but I could imagine that your approach is better or easier to manage. Please tell me about your findings!

Part 2: We do not have a central UI. For every page, one vertical is responsible for the main content, and this vertical includes parts of other verticals in the page using ESI or AJAX. JavaScript, CSS and images come from a so-called asset server. If you want to serve UI from the verticals/microservices, you have to solve the problem that some parts of the UI (JS, CSS) are shared. Asset servers are difficult, but far easier than having a UI team that has to collaborate daily with multiple "backend" teams. Such structures hardly scale from an organizational perspective, and it will be hard to deploy services independently if you have shared UI services.
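The following is a simplified, hypothetical stand-in for what a page-assembly proxy does with ESI. It replaces `<esi:include src="..."/>` tags with fragments fetched from other verticals. Real ESI processors do the fetching themselves (Varnish, for example, implements a subset of ESI), so this sketch only shows the substitution step. The `assemble` function and the `fetch` callable are assumptions for illustration.

```python
import re
from typing import Callable

# Matches only the simple self-closing form <esi:include src="..."/>.
ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')

def assemble(page: str, fetch: Callable[[str], str]) -> str:
    """Replace each ESI include with the fragment served by the owning vertical."""
    def resolve(match):
        try:
            return fetch(match.group(1))
        except Exception:
            # A failing vertical should not break the whole page: drop its fragment.
            return ""
    return ESI_INCLUDE.sub(resolve, page)
```

Dropping a failed fragment instead of failing the whole page is one design choice. A real setup might render a fallback instead.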

I would imagine that developers who resist becoming full-stack developers will favor separating the UI from backend services, while developers who feel comfortable in both worlds would prefer microservices with UI, aka verticals. Yet another variation of Conway's Law. But think about the consequences of separating the UI from 'services': every customer-facing feature would require two teams to collaborate. This is a nightmare. With multiple product teams, each responsible for everything from the UI down to the DB, all of these teams can work (and deploy) independently of each other 90% of the time.

To convince the resistant developers, maybe you should try pair programming. It's great :-)

- Guido Steinacker, 31.10.2015 02:52

Interesting question. Yes, I thought about similar architectures quite early. For example, I kept an eye on http://prevayler.org, which follows a similar approach. Yes, it could have been helpful in some areas, no question. But...

In the beginning (2010, earlier than Martin Fowler's CQRS blog post, btw), there were lots of other questions, for example: do we know at all how to solve our problems better than all the companies offering off-the-shelf software?

A little later, we decided to vertically decompose the system and to distinguish macro from micro architecture. Using CQRS and event sourcing would have been a decision within the micro architecture of a vertical. In our understanding, that is not something to discuss centrally; it is up to the teams. We definitely did not want ivory-tower software architects telling other developers how to develop their software.

Whether CQRS+ES would have been better depends on the exact requirements of the different verticals: today, roughly twelve completely different verticals consisting of maybe 50 applications.

I'm quite sure that we will see CQRS+ES µServices soon, because it's a really interesting approach. In the beginning we had other problems, but now we have an architecture in which it's easy to try out new paradigms (and languages and databases and...) without any risk.

- Guido Steinacker, 31.10.2015 02:57

It's not only ineffective; according to Conway, it's hardly possible. On the other hand, there is something like an "Inverted Conway's Law": if you choose an architecture, it will certainly change the communication structures of your organization. That's what we observed when we established our vertical-style architecture.

- Guido Steinacker, 31.10.2015 03:06

Sharing DTOs and similar code is exactly what we want to avoid. It's simply not worth sharing. BTW, I do not like generating JSON or XML from beans, because it's too easy to break contracts with other services when refactoring code in your favorite IDE. The media types are the contract between services, and generating or parsing a JSON or XML document is so easy that it's not worth the trouble of code sharing.
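The sketch below illustrates this alternative. It assumes JSON and a hypothetical shopping-cart consumer that needs only a product's id, name and price. The consumer maps the media type into its own local type instead of using a shared DTO. It then publishes what it relies on as a consumer-driven contract check that could run in the producer's pipeline. All names and fields are illustrative.

```python
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class CartItem:
    """The cart's own, local view of a product: only the extract it needs."""
    product_id: str
    name: str
    price_cents: int

def cart_item_from(product_json: str) -> CartItem:
    # Hand-written mapping from the media type; no shared bean from the producer.
    doc = json.loads(product_json)
    return CartItem(doc["id"], doc["name"], doc["price"]["amount"])

def cart_contract_holds(producer_sample: str) -> bool:
    """Consumer-driven contract check the cart team could hand to the product team.

    It asserts only what the cart relies on, so the producer stays free to
    add fields or refactor its internal classes.
    """
    try:
        item = cart_item_from(producer_sample)
    except (KeyError, TypeError, ValueError):  # missing field, wrong shape, bad JSON
        return False
    return isinstance(item.name, str) and isinstance(item.price_cents, int)
```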

This is mostly because our verticals are implementations of a bounded context... A shopping cart, for example, needs products, but it does not need all the details of a product, only an extract. The same is true for almost all the different bounded contexts of an e-commerce system...

- 17.11.2015 14:04

Thank you so much for sharing your experience; that was an awesome read. Can you share more details about your solution for “polling” an Atom feed? Do you have cases where one consumer cannot keep up with all the data it polls, so that you need to scale out the polling consumers? How do you distribute the load across these consumers so that they do not poll and process the same data?

- Guido Steinacker, 20.11.2015 15:24

With Atom feeds, you can use HTTP caching (via a reverse proxy) for the different pages of the feed. This way, you should be able to serve feeds to any number of consumers.
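The minimal sketch below shows why this caches well. It assumes feed pages are numbered from the oldest entry, similar in spirit to archived feeds in RFC 5005. A full page never changes and can carry a long `max-age`, while only the newest page needs a short one. The page size and cache lifetimes are hypothetical values.

```python
from typing import List, Tuple

PAGE_SIZE = 3            # hypothetical; real feeds would use larger pages
ARCHIVE_MAX_AGE = 86400  # a full page never changes, so proxies may keep it long
HEAD_MAX_AGE = 10        # the newest page still grows, so keep it short

def feed_page(entries: List[str], page: int) -> Tuple[List[str], str]:
    """Return the entries of one feed page plus a Cache-Control header value.

    Pages are numbered from the oldest entry, so a full page's content is
    stable and a reverse proxy can answer repeated polls without hitting
    the service.
    """
    start = page * PAGE_SIZE
    if page < 0 or start >= max(len(entries), 1):
        raise IndexError("no such feed page")
    chunk = entries[start:start + PAGE_SIZE]
    complete = len(chunk) == PAGE_SIZE
    max_age = ARCHIVE_MAX_AGE if complete else HEAD_MAX_AGE
    return chunk, f"public, max-age={max_age}"
```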

If you prefer Kafka, lots of clients should not be a problem either: Kafka is basically a ring buffer in which each client knows its current read position (possibly using ZooKeeper to store this position). At least in our situation, scalability is not a problem in either scenario.

- 24.03.2016 02:07

[…] interested in the ‘how’ instead of the ‘why’, please have a look into an earlier article on monoliths and microservices […]

- 20.03.2016 18:51

[…] When we started ‘Lhotse’, the project to replace the old, monolithic e-commerce platform of otto.de a few years ago, we chose self-contained systems (SCS) to implement the new shop: instead of developing a single big monolithic application, we chose to vertically decompose the system by business domains (’search’, ’navigation’, ‘order’, …) into several mostly loosely coupled applications, each with its own UI, database, redundant data and so on. If you are more interested in the ‘how’ than the ‘why’, please have a look at an earlier article on monoliths and microservices. […]

- 21.04.2016 21:51

[…] Otto's IT team is already playing in the Champions League in this area and, with “On Monoliths and Microservices”, has produced a must-read article on the subject. On this topic, Guido from Otto has […]

- 24.03.2016 00:53

[…] When we started ‘Lhotse’, the project to replace the old, monolithic e-commerce platform of otto.de a few years ago, we chose self-contained systems (SCS) to implement the new shop: instead of developing a single big monolithic application, we chose to vertically decompose the system by business domains (’search’, ’navigation’, ‘order’, …) into several mostly loosely coupled applications, each with its own UI, database, redundant data and so on. If you are more interested in the ‘how’ than the ‘why’, please have a look at an earlier article on monoliths and microservices. […]

- 21.04.2016 21:41

[…] be of help. Otto's IT team is already playing in the Champions League in this area and, with “On Monoliths and Microservices”, has produced a must-read article on the subject. On this topic, Guido from Otto has also […]

- 03.01.2016 22:22

"Sharing DTOs and similar kind of code is exactly what we do not want to have." I agree with that. It's a very hard topic to discuss with many Java developers... I'm very happy not to be the only one ;)

- 30.11.2016 19:17

[…] test management tools do I use, and what for?”, “What is the microservices trend doing to us?” and “How do we succeed with a change towards agile […]

- 04.04.2018 08:20

[…] replaced. The mammoth project was successfully launched in October 2013 and, besides a modern architecture, also meant saying goodbye to countless “historically grown” […]

- 08.07.2018 13:59

[…] https://dev.otto.de/2015/09/30/on-monoliths-and-microservices/ […]
