Learning to Rank Is Rocket Science: How Clojure Accelerates Our Machine Learning with Deep Neural Networks

In This Article

In e-commerce search, decisions made in seconds determine whether customers stay or leave. At OTTO, we rely on learning to rank: instead of fixed rules, our models learn from millions of real interactions which products are truly relevant.

In this article, we explain why Clojure is central to our machine learning production stack, and how Clojure and Polylith help us run complex machine learning pipelines in a stable, efficient, and maintainable way.

From Deep Space 1 to Learning to Rank at OTTO

For years, Gradient Boosted Decision Trees (GBDTs) were the undisputed champions when it came to producing precise rankings for our customers. They are reliable, interpretable, and remarkably capable. Yet with our Deep Neural Network, we were able to outperform our champion.

Our goal: take our customers’ search experience to the next level, efficiently and cost-effectively. To do this, we use Clojure, a programming language that makes it easy for developers to maintain and extend code. A language that’s fun to use.

What is Clojure? A programming language based on Lisp. Lisp (short for “List Processing”) is a language developed in 1958 by John McCarthy at MIT. It is known for its unique syntax, powerful metaprogramming capabilities, and strong influence on the development of other programming languages.

Lisp has already been used in space. More precisely, Lisp was an important part of the software used on Deep Space 1 (DS1). The system for autonomous control of the spacecraft (Remote Agent) was written in Lisp.

A remarkable aspect of using Lisp on DS1 was the ability to run a Read–Eval–Print Loop (REPL) on the spacecraft. This proved extremely valuable when debugging issues in space, as engineers were able to inspect and adjust code on the spacecraft, solving problems “in flight.”

Python is the first-class citizen for machine learning. Nevertheless, we deliberately chose the Lisp dialect Clojure to implement our Deep Neural Network-based Learning to Rank service. That may sound unusual at first. But Deep Space 1 already demonstrated that Lisp can operate complex, fault-tolerant systems in one of the most demanding environments, which makes it a strong candidate for AI and autonomous systems. That’s more than enough reason for us to explore it further.

What makes Clojure special?

Clojure is a dynamic, functional programming language that runs on the Java Virtual Machine (JVM). It was designed to combine the strengths of functional programming with the reliability and rich ecosystem of the JVM.

The language is based on Lisp, one of the most important languages in the history of computer science. Its innovative ideas and concepts have profoundly shaped many other languages and continue to do so today, showing that good ideas are timeless: still relevant and valuable decades later.

Released in 2007 by its creator, Rich Hickey, Clojure was developed with an emphasis on simplicity, clarity, and pragmatism. Hickey highlighted features like immutability, higher-order functions, and metaprogramming, all while keeping the language practical and approachable.

Beyond its elegance, Clojure offers many advantages:

• Concurrency: It was designed from the ground up for parallelism and concurrency, allowing it to take full advantage of modern hardware.

• Immutability: By default, Clojure’s data structures are immutable, keeping application state clear and traceable.

• JVM Interop: Running on the JVM gives Clojure direct access to the huge and mature ecosystem of Java libraries. This means developers can utilize proven solutions for things like database access, networking, and more.

• REPL-driven development: Clojure's REPL (Read-Eval-Print Loop) offers an interactive, incremental development style. This allows engineers to write, test, and debug code on a running application, which provides fast feedback and makes prototyping more efficient.

• Functional programming: The foundation of Clojure is its use of pure functions, computations that operate without side effects. This improves testability, enables parallelization, and leads to better software design.
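Two of these points, immutability and pure functions, can be illustrated with a minimal sketch. The product map and its keys are hypothetical and not taken from our codebase; the snippet uses only `clojure.core`.

```clojure
;; Hypothetical product record; the keys are assumptions for illustration.
(def product {:id 42 :title "Desk lamp" :clicks 10})

;; assoc returns a new map; the original `product` is never modified.
(def updated (assoc product :clicks 11))

;; A pure function: the result depends only on its arguments.
(defn click-rate
  "Clicks divided by impressions, or 0.0 when there were no impressions."
  [{:keys [clicks impressions]}]
  (if (pos? impressions)
    (/ clicks (double impressions))
    0.0))

(:clicks product)  ; still 10
(:clicks updated)  ; 11

;; Higher-order function: map applies click-rate to every record.
(map click-rate [{:clicks 5 :impressions 100}
                 {:clicks 0 :impressions 0}])
```

Because `click-rate` has no side effects, it can be tested with plain data and called from any number of threads without coordination.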

Why Clojure for Machine Learning?

In the world of Machine Learning (ML), Python and R are the leading languages. They offer an easy entry point, numerous tools, and extensive documentation, which simplifies exploratory and prototyping work. However, in our business environment, we must focus more on operational stability and the cost efficiency of the solution. That is why we rely on Clojure and benefit from the following advantages:

• (Cost-)Efficient Data Pipelines and Modular Feature Engineering: Transformations in our data pipelines are inherently functional. Large datasets must be manipulated, filtered, and aggregated to obtain new data. Most operations are independent and can be processed as a stream. This leads to better parallelization, improved testability, and reduced memory usage.

• Operational Resilience: Running on the JVM gives us excellent runtime speed and a manageable memory footprint. The JVM's comprehensive suite of application monitoring tools allows us to accurately diagnose and eliminate both performance and memory-related issues.
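As a rough illustration of the first point, the following sketch composes independent transformation steps with a transducer and aggregates the result one record at a time, without building intermediate collections. The record fields and the function name `count-clicks` are assumptions made for this example.

```clojure
;; Hypothetical interaction records; the field names are assumptions.
(def interactions
  [{:query "lamp" :product 1 :clicked? true}
   {:query "lamp" :product 2 :clicked? false}
   {:query "sofa" :product 3 :clicked? true}
   {:query "lamp" :product 1 :clicked? true}])

;; Each step is an independent transformation that can be tested alone.
(def clicked-pairs
  (comp (filter :clicked?)
        (map (juxt :query :product))))

(defn count-clicks
  "Counts clicks per [query product] pair in a single pass over records."
  [records]
  (transduce clicked-pairs
             (completing (fn [acc pair] (update acc pair (fnil inc 0))))
             {}
             records))

(count-clicks interactions)
;; => {["lamp" 1] 2, ["sofa" 3] 1}
```

The same `clicked-pairs` transducer could be reused unchanged on a lazy sequence or a channel, which is one reason this style keeps feature engineering modular.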

How we operate our Neural Network

Our architecture is built around a PostgreSQL database containing all queries and their products. At serving time, Clojure microservices access this data to rank incoming search results (Retrievals) based on the relevance scores.

The scores are generated by predictions from our Deep Neural Network. It operates on relevance signals, interaction data of our customers, as well as the product data. Our machine learning pipelines are built as Clojure jobs and run on AWS. They prepare data for model training and prediction.
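The serving step can be pictured roughly as follows. This is a simplified sketch, not our service code: an in-memory map stands in for the PostgreSQL lookup, and the names `scores` and `rank-retrieval` as well as the fallback score of 0.0 are assumptions.

```clojure
;; Stand-in for the database: query -> product id -> precomputed score.
(def scores
  {"lamp" {101 0.91, 102 0.35, 103 0.78}})

(defn rank-retrieval
  "Orders product ids by descending relevance score.
   Products without a score fall back to 0.0 and therefore sort last."
  [scores query product-ids]
  (let [by-product (get scores query {})]
    (sort-by #(get by-product % 0.0) > product-ids)))

(rank-retrieval scores "lamp" [102 104 101 103])
;; => (101 103 102 104)
```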

From Data Blocks to Data Streams: Streaming Pipelines with core.async

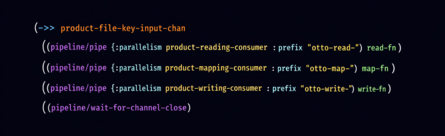

Inspired by UNIX pipes, our jobs treat data as a stream. Individual items flow through a series of processing steps like reading, filtering, and mapping. We use core.async channels to connect these steps, creating a continuous data flow.

The ->> threading macro connects functions in a clean pipeline, improving code clarity and data flow traceability.
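A minimal sketch of this pattern could look like the following. It assumes the `org.clojure/core.async` library is on the classpath; the step functions (`read-items`, `filter-step`, `map-step`, `run-job`), the buffer size, and the record keys are hypothetical and chosen for illustration only.

```clojure
(require '[clojure.core.async :as a])

(defn read-items
  "Source step: puts every item of coll on a channel, then closes it."
  [coll]
  (a/to-chan! coll))

(defn filter-step
  "Returns a channel that carries only the items for which pred is truthy."
  [pred in]
  ;; pipe copies items from `in` to the new channel and returns that channel.
  (a/pipe in (a/chan 16 (filter pred))))

(defn map-step
  "Returns a channel that carries (f item) for every item on `in`."
  [f in]
  (a/pipe in (a/chan 16 (map f))))

(defn run-job
  "Threads a source channel through the steps and collects the output."
  [records]
  (->> (read-items records)
       (filter-step :in-stock?)
       (map-step :product)
       (a/into [])   ; channel that yields one vector when the input closes
       (a/<!!)))     ; block until that vector is available

(run-job [{:product 1 :in-stock? true}
          {:product 2 :in-stock? false}
          {:product 3 :in-stock? true}])
;; => [1 3]
```

Each step takes a channel as its last argument and returns a new channel, which is what lets `->>` read like a UNIX pipeline from top to bottom.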

Schema-based Data Storage with Protobuf

Our jobs process files in Protobuf format compressed with lz4 or zstandard, which results in the following advantages:

• Self-documenting data: Protobuf is a schema-based format and therefore documents the content of each record. When requirements change, we have to update the schema, which keeps the description up to date.

• Built-in validation: Schemas enforce correct data types and mandatory fields. Jobs validate these constraints automatically when processing files.

• Higher efficiency: By using the binary format, our jobs spend much less time on string processing and need significantly less time for reading and writing files.

• Streamable format: Protobuf's streaming capability lets us read and write files record-by-record, keeping memory usage low even with large datasets.

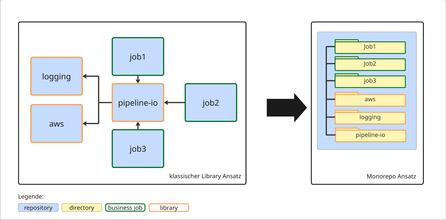
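Record-by-record reading relies on each record being framed with its length. The Protobuf Java library offers `writeDelimitedTo` and `parseDelimitedFrom` for this kind of framing; whether our jobs use exactly these calls is not stated here. So that the sketch runs without generated message classes, it uses a fixed 4-byte length prefix and raw byte payloads as a stand-in, and it leaves out compression. All function names are hypothetical.

```clojure
(import '(java.io ByteArrayInputStream ByteArrayOutputStream
                  DataInputStream DataOutputStream EOFException))

(defn write-record!
  "Writes one record as a 4-byte length followed by its payload."
  [^DataOutputStream out ^bytes payload]
  (.writeInt out (alength payload))
  (.write out payload 0 (alength payload)))

(defn record-seq
  "Lazily reads records one at a time until the stream ends.
   Only the record currently being processed has to be in memory."
  [^DataInputStream in]
  (lazy-seq
    (when-let [n (try (.readInt in) (catch EOFException _ nil))]
      (let [buf (byte-array n)]
        (.readFully in buf)
        (cons buf (record-seq in))))))

(defn round-trip
  "Encodes strings as framed records and decodes them again."
  [strings]
  (let [baos (ByteArrayOutputStream.)]
    (with-open [out (DataOutputStream. baos)]
      (doseq [s strings]
        (write-record! out (.getBytes ^String s "UTF-8"))))
    (with-open [in (DataInputStream.
                     (ByteArrayInputStream. (.toByteArray baos)))]
      ;; mapv is eager, so all reads happen before the stream is closed.
      (mapv #(String. ^bytes % "UTF-8") (record-seq in)))))

(round-trip ["lamp" "sofa" "desk"])
;; => ["lamp" "sofa" "desk"]
```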

Easy Code Sharing with Polylith

We used to share code through libraries, resulting in complex dependency chains. For this project, we wanted to simplify dependency management significantly. So we decided to give the monorepo approach a try and use Polylith to organize the project and manage dependencies.

The benefits we have realized:

• Directories instead of separate projects: We organize libraries, jobs, and services as "building blocks" in separate directories within a single repository. Dependencies are just references to building block directories. This eliminates cross-repository management overhead.

• Transparent dependencies: Because applications always work with current shared code, no library-style versioning is needed, which entirely eliminates the possibility of outdated dependencies. Furthermore, development tooling finds all code references automatically, allowing us to refactor safely and confidently.

• Safety during changes: When we change functionality, we run all tests in the monorepo and immediately validate all affected components.

• Automated CI/CD with GitHub Actions: Polylith automatically determines which projects have changed and require a rebuild. Once identified, a single pipeline runs all tests, creates artifacts, and deploys them to development and production environments.
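To make "dependencies are just references to building block directories" more concrete, here is a sketch of what a project's deps.edn can look like in a Polylith workspace. The project name, the brick names, and the Clojure version are hypothetical; only the `:local/root` mechanism of tools.deps and the usual `components/` and `bases/` directory layout are assumed.

```clojure
;; projects/ranking-service/deps.edn (hypothetical project and brick names)
{:deps {;; shared building blocks, referenced by directory instead of version
        poly/protobuf-io {:local/root "../../components/protobuf-io"}
        poly/scoring     {:local/root "../../components/scoring"}
        ;; the base that exposes the service's entry point
        poly/ranking-api {:local/root "../../bases/ranking-api"}
        ;; external libraries are still pinned as usual
        org.clojure/clojure {:mvn/version "1.11.1"}}}
```

Since the shared bricks carry no version number, every project that references them is built against the code currently in the repository.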

Summary

True, we're not launching rockets here. But Lisp and Clojure's proven advantages remain relevant today. They power efficient machine learning pipelines, enable fast iteration cycles, and deliver predictable costs.

If you're pursuing a similar direction, these topics are worth exploring:

• Clojure, a data-centric, simple, and performant language

• Monorepo, for clear source code organization

• Protobuf, for documentation, validation, and efficient processing

And if you want to delve deeper into the basics of learning to rank, you will find the right introduction in our previous articles:

• Learning to Rank – To the Moon and Back (-propagation): Deep Neural Networks That Learn to Rank What You Love

• Part 1: Introduction in Learning to Rank for E-Commerce Search

• Part 2: Data Collection for Learning to Rank

The journey continues for us as well. 🚀 We are planning personalized rankings that need to be calculated in real time and can no longer be pre-calculated. Clojure provides the foundation to meet this challenge effectively.
