What is the article about?

In September 2023, ogGPT was launched as our internal, privacy-compliant AI assistant. Employees can use it to bring the benefits of generative artificial intelligence to their work and interact with uploaded documents. After almost a year with Retrieval Augmented Generation, we wanted to take ogGPT's capabilities to a new level with the help of agent-based modeling. In this article, you can read about our reasons for doing so and the path we took.

Challenges and solutions for dynamic model support

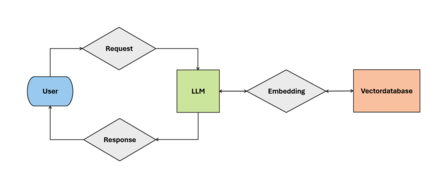

The feature allowing users to upload their own documents to ogGPT is almost as old as ogGPT itself. Although the name of the feature has changed over time, the underlying implementation has remained largely the same: when a user makes a request, LangChain and a large language model (LLM) first rephrase the relevant parts of the query. The rephrased query is then embedded with Azure OpenAI ada-v2 and compared with the existing text chunks in the vector database. Finally, the most relevant parts of the document are sent along with the user's query to an Azure OpenAI LLM, which generates a response.
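To make the retrieval step more tangible, here is a minimal sketch of the "embed, compare, pass along" idea. It is not ogGPT's actual code: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the list of chunks replaces the vector database, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vector, embed(chunk)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks and the user's query into one prompt."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Context:\n{context}\n\nQuestion: {query}"


chunks = [
    "The vacation policy grants thirty days per year.",
    "The cafeteria opens at noon.",
    "Expenses are reimbursed monthly.",
]
best = retrieve("How many vacation days do I get?", chunks, top_k=1)
print(build_prompt("How many vacation days do I get?", best))
```

Note that this pipeline always retrieves something, whether or not the request needs the documents at all.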

Over time, however, we noticed several weaknesses in this approach. For example, what happens if a user wants to retrieve information from their documents first and then compare it with general information? In such a case, the system would still search for documents matching the general query and pass them to the language model, even if they were irrelevant to it.

Or, what should happen if the user wants the document to be summarized? Implementing such a summary isn't particularly complicated, but how should the model know when to summarize and when to search through all documents instead?

Ultimately, all these questions boil down to one core problem: we currently have to decide for the model when to apply which functionality, whereas it would be better if the model could make these decisions itself. Instead of dictating to the model exactly what to do and when, we should support it by providing the tools it needs for its tasks.

How do agents work?

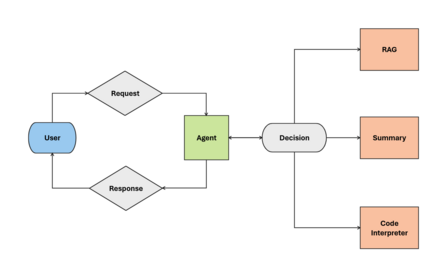

Fortunately, there is already a concept that can do just that: "agents" and, appropriately, "tools". The foundation for this has been laid by an innovation in the models of major LLM providers. "Function calling" allows models to invoke specific code snippets with their text output, which significantly extends their capabilities.

While this was theoretically possible before, in August 2024 OpenAI improved its models to make function calling more reliable. But what exactly are these "tools"? The concept is quite simple: as developers, we define a function that, for example, manages the search process for relevant documents in a database. To turn this function into a tool, we need to add an appropriate name and description so that our LLM knows what arguments to pass to the function and in what context it should be executed.
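As an illustration, a function and its tool description might look like the following sketch. The dictionary follows the general shape of OpenAI's function-calling format (a name, a description, and a JSON Schema for the parameters). The function `search_documents`, its parameters, and the tiny in-memory corpus are assumptions made for this example, not ogGPT's real implementation.

```python
def search_documents(query: str, top_k: int = 3) -> list[str]:
    """Hypothetical search function; a real one would query the vector database."""
    corpus = [
        "Employees receive 30 vacation days per year.",
        "Travel expenses are reimbursed monthly.",
    ]
    words = query.lower().split()
    hits = [p for p in corpus if any(word in p.lower() for word in words)]
    return hits[:top_k]


# The description the model sees: it tells the model when to use the
# function and which arguments it has to supply.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "search_documents",
        "description": (
            "Search the user's uploaded documents for passages that are "
            "relevant to a question about their content."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                "query": {
                    "type": "string",
                    "description": "Search phrase derived from the user's request.",
                },
                "top_k": {
                    "type": "integer",
                    "description": "Maximum number of passages to return.",
                },
            },
            "required": ["query"],
        },
    },
}

print(search_documents("vacation"))
```

The quality of the name and description matters as much as the function body, because they are the only information the model has when deciding whether the tool fits a request.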

All of this is passed to the model, along with other possible tools and the system prompt. When the user makes a request, the model, or agent, can decide whether it wants to execute one of the available tools. It does this by generating and returning the function name and the required arguments in a predefined structure. We can then evaluate this output and execute the desired function on our server. The output of the function is returned to the agent, which then generates a response for the user.

This iteration can also be repeated several times in a row, depending on how relevant the available functions are to the request and what the output of previous function calls was. Thus, the agent can also correct its own previous errors, for example by calling the function with different parameters.
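The loop described above can be sketched in a few lines. To keep the example runnable without an API key, the LLM is replaced by `fake_model`, a scripted stand-in that first searches with a poor query and then corrects itself. The message format, the tool, and all names are hypothetical simplifications, not the structure ogGPT uses.

```python
import json


def search_documents(query: str) -> str:
    """Hypothetical tool: look up a passage by keyword."""
    documents = {"vacation": "Employees receive 30 vacation days."}
    for keyword, text in documents.items():
        if keyword in query.lower():
            return text
    return "No matching passage found."


TOOLS = {"search_documents": search_documents}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM that decides on the next step."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        # First attempt: a query that happens to find nothing.
        arguments = json.dumps({"query": "holiday"})
        return {"tool_call": {"name": "search_documents", "arguments": arguments}}
    if tool_results[-1]["content"] == "No matching passage found.":
        # The previous call failed, so try again with different parameters.
        arguments = json.dumps({"query": "vacation days"})
        return {"tool_call": {"name": "search_documents", "arguments": arguments}}
    return {"content": f"According to the document: {tool_results[-1]['content']}"}


def run_agent(user_request: str, model=fake_model, max_iterations: int = 5) -> str:
    """Call the model repeatedly, executing requested tools in between."""
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_iterations):
        reply = model(messages)
        if "tool_call" not in reply:
            return reply["content"]  # final answer for the user
        call = reply["tool_call"]
        arguments = json.loads(call["arguments"])
        result = TOOLS[call["name"]](**arguments)
        messages.append({"role": "tool", "name": call["name"], "content": result})
    return "Stopped: iteration limit reached."


print(run_agent("How many vacation days do I get?"))
```

The iteration limit is a design choice worth keeping in any real loop: it prevents an agent that never reaches a final answer from calling tools indefinitely.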

Implementation in ogGPT

This kind of independence was exactly what our customGPTs lacked. Therefore, we began transforming the previous RAG (Retrieval Augmented Generation) implementation into a tool, and introduced agent-based modeling to our users along with system prompts. While this may sound trivial at first, many test iterations took place in the background to adapt the model's behavior to our expectations.

A little later, we also added a tool that allows the agent to create a summary of a document. This led to completely new workflows that we hadn't anticipated before. For example, the agent combined its tools by first creating a summary and then using the more specific vector search.

On closer inspection, it turned out that in some cases the summary wasn't specific enough, so the model used the vector search to fill in the gaps. Likewise, when the user's request wasn't clear enough, the summary was first used to get an overview of the document, and follow-up questions could then be asked based on the content. ogGPT had indeed become much more independent.

We have also been able to address another weakness of LLMs: numbers and data. Because the models are based on the semantic representation of text, many models fail at complex calculations required for data analysis. This is where the "code interpreter" comes in: It allows the model to write its own code and then execute it in a kind of sandbox environment. It receives the code outputs back, which it can then use to generate the answer. This way we don't overwhelm the model with numerical input, but still enable it to interact with the data in a flexible way.

Again, the agent benefits from multiple iterations: Is the data structured differently than expected, so that the code produces an error? No problem: ogGPT recognizes the error, adjusts the code independently, and still generates the desired result without requiring the user to make a new request.

Current status and outlook

This independence opens up a vast number of potential applications and therefore requires a completely new approach to ensuring the quality of responses. We have not yet exhausted all the possibilities and continue to be pleasantly surprised by the agent's behavior. At the same time, it presents us with new challenges in testing changes. Although we have improved our approach in this regard, it remains an iterative process that is becoming increasingly relevant as LLMs continue to develop.

Nevertheless, in the future, we want to add more tools to make our AI assistant even more capable, thereby helping our users better with their tasks. Possibilities include internet access, which would provide ogGPT with access to up-to-date information, or the integration of other internal data sources, allowing flexible expansion of knowledge.

But who knows: in the rapidly evolving world of artificial intelligence, no one can predict what possibilities the future will bring. We, and ogGPT, are certainly excited about it!
