What is the article about?

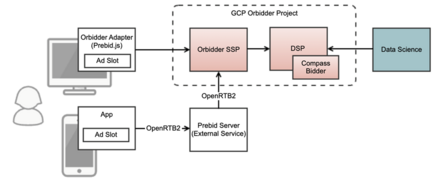

In collaboration with Online Marketing, Team Siggi at OTTO BI developed an in-house programmatic advertising solution in 2018. For this purpose, separate SSP (Supply Side Platform) and DSP (Demand Side Platform) solutions were developed, among other components, under the name Orbidder.

Our colleague Dr. Rainer Volk has already described the background to the SSP and DSP and outlined how these services work together in this blogpost (in German).

Orbidder has been live since 2019. It handles 1 to 1.2 billion bid requests daily and steers over 300 active marketing campaigns simultaneously via Data Science models running in the background.

At the beginning of 2022 it was decided that the solution, initially set up together with Sales to steer marketed advertising campaigns on external websites, should now also be used to steer advertising on otto.de site areas. This generated new requirements for the system landscape and steering logic. In this blogpost we'd like to share how we implemented these new requirements.

The problem we tackled

Figure 1: Basic structure of the SSP/DSP

Connecting otto.de to the system created a number of requirements that couldn't be mapped with the setup we had been running to date.

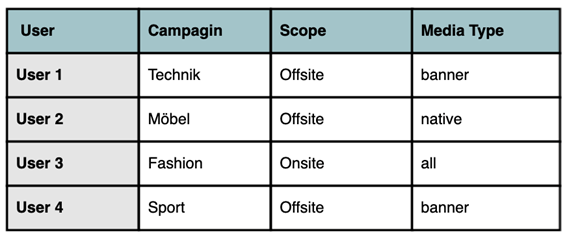

Until then, for all known users ('known' means an Otto cookie is currently set on their device), a batch process determined every 2 hours which campaign was likely to suit them best, based on a range of features (click history, abandoned shopping carts, etc.). This user-specific assignment was then exported and stored in the DSP. However, this pre-calculation and the focus on one campaign per user caused two technical issues, and there was an organisational 'sporting challenge' thrown into the mix too.
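To make the old setup concrete, here is a minimal Python sketch of one such batch run. It assumes a hypothetical hand-written weight table (`CAMPAIGN_WEIGHTS`) in place of the actual Data Science models, and the feature and campaign names are illustrative only. What it does reflect from the text is that every known user receives exactly one campaign, valid until the next run 2 hours later.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical stand-in for the Data Science models: a linear weight per
# user feature and campaign. Feature and campaign names are illustrative.
CAMPAIGN_WEIGHTS = {
    "consumer_electronics": {"clicked_electronics": 2.0, "abandoned_cart": 1.0},
    "fashion": {"clicked_fashion": 2.0, "abandoned_cart": 0.5},
}

ASSIGNMENT_TTL = timedelta(hours=2)  # the batch ran every 2 hours


@dataclass(frozen=True)
class Assignment:
    campaign: str
    valid_until: datetime


def score(features: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of a user's features for one campaign."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


def batch_assign(users: dict[str, dict[str, float]], now: datetime) -> dict[str, Assignment]:
    """One batch run: pick exactly one campaign per known user."""
    assignments = {}
    for user_id, features in users.items():
        # max() evaluates the key immediately, so using the loop variable is safe
        best = max(CAMPAIGN_WEIGHTS, key=lambda c: score(features, CAMPAIGN_WEIGHTS[c]))
        assignments[user_id] = Assignment(best, now + ASSIGNMENT_TTL)
    return assignments
```

The result is keyed by user alone: neither the page nor the individual placement appears anywhere in the calculation.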

Figure 2: User assignment

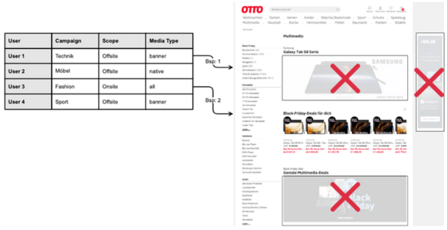

Problem 1 – No campaign for the current page

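What happens if a user on otto.de lands on, say, an offer page for tablets or smartphones? The campaign pre-calculated for this user in the last batch run does not take the current page into account, so it may have nothing to do with the products the user is looking at.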

Figure 3: Problem 1

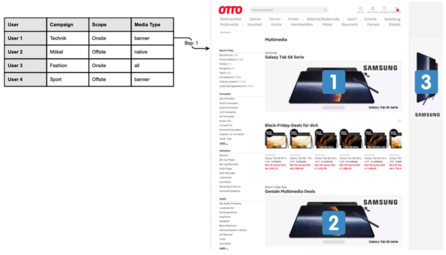

Problem 2 – Campaigns and advertising media per page, not per placement


Figure 4: Problem 2

Usually, a bid request includes all placements/ad slots on a webpage. Although bids are submitted individually for each placement, the campaign assignment still comes from the batch process. As a result, a user is always assigned to precisely one campaign for 2 hours. If 3 placements are available on a webpage and 2 of them are won, the same campaign, and thus the same advertising medium, is displayed on both placements.

In our example, the batch process calculated a Consumer Electronics campaign for User 1, which also suits the page. However, all advertising spaces on the webpage are then attributed to the same campaign, resulting in similar advertising media on all placements.
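The following minimal sketch shows why this happens, assuming a hypothetical `ASSIGNMENTS` snapshot of the exported batch result and a hypothetical `CREATIVES` table of advertising media. The lookup is keyed by user only, so every won placement receives the same creative, and the page URL plays no role at all.

```python
# Hypothetical snapshot of the exported batch result: one campaign per user
ASSIGNMENTS = {"user1": "consumer_electronics"}

# Hypothetical advertising medium (creative) per campaign
CREATIVES = {"consumer_electronics": "tv_banner"}


def fill_placements(user_id: str, page_url: str, won_placements: list[str]) -> dict[str, str]:
    """Old behaviour: every won placement gets the creative of the user's batch campaign.

    page_url is accepted but never used: the batch result cannot react to the
    page the user is currently viewing.
    """
    campaign = ASSIGNMENTS[user_id]
    return {placement: CREATIVES[campaign] for placement in won_placements}
```

Called for User 1 with two won placements, this returns `tv_banner` for both, whatever page URL is passed in.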

Responsibilities distributed across multiple teams

On top of the two problems mentioned above, there was another challenge, not so much a technical or domain issue as an organisational one. According to Wikipedia, 'Conway's Law' states that:

"Organizations are compelled to design systems that mirror their own communication structure."

Well, this 'law' is reflected in our own product too! The SSP/DSP infrastructure was operated, and the model results executed, by one team, while the Data Science models were developed by another. You can probably imagine the difficulties this caused. The different programming languages alone (Data Science models in Python, DSP/SSP in Go) made modifying and testing algorithms difficult.

To enable further development, we therefore also needed to rethink the interfaces between the teams' products and find out whether both teams could develop more independently of each other.

Solution and tech stack

So how could we tackle these issues? First of all, by talking. In a series of joint meetings, developers from both teams summarised the core requirements as follows:

• Calculation of user assignment per ad slot/placement, i.e. no longer just one campaign per user

• Consideration of the user's current environment (onsite, offsite, app, URL)

• Independence in Data Science development.

To implement these requirements, we needed to assign users to a campaign and placement much faster and more flexibly than before. And to take information about the user's environment into account, the calculation would have to be carried out in real time. Real-time assignment was clearly the way forward.
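Per-placement, context-aware assignment can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the `Campaign` and `Context` types, the fixed URL-keyword bonus and the `user_affinity` score, which stands in for whatever the Data Science models compute in real time. It only shows the shape of the new requirement: the environment decides which campaigns may run at all, the URL influences the ranking, and each placement on the page gets its own campaign instead of one campaign per user.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    environment: str  # "onsite", "offsite" or "app"
    url: str          # URL of the page containing the placements


@dataclass(frozen=True)
class Campaign:
    name: str
    environments: frozenset[str]   # environments the campaign may run in
    url_keywords: tuple[str, ...]  # hypothetical page-relevance signal
    user_affinity: float           # hypothetical real-time model score for this user


def relevance(campaign: Campaign, ctx: Context) -> float:
    """User affinity plus a fixed bonus when the page URL matches the campaign."""
    bonus = 1.0 if any(k in ctx.url for k in campaign.url_keywords) else 0.0
    return campaign.user_affinity + bonus


def assign_per_placement(placements: list[str], campaigns: list[Campaign],
                         ctx: Context) -> dict[str, str]:
    """Give each placement its own campaign, most relevant first."""
    eligible = [c for c in campaigns if ctx.environment in c.environments]
    ranked = sorted(eligible, key=lambda c: relevance(c, ctx), reverse=True)
    # zip stops at the shorter list: placements beyond the number of eligible
    # campaigns are left unassigned instead of repeating a campaign
    return {p: c.name for p, c in zip(placements, ranked)}
```

For a user on an onsite smartphone page, an electronics campaign with a URL match outranks a fashion campaign with slightly higher affinity, and an app-only campaign is filtered out entirely.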

With the requirements defined, we turned to the implementation. It quickly became apparent that we wouldn't be able to implement the new requirements with our existing approach. Here are just a few of the roadblocks:

• The bid function must not become so complicated that it prevents the CPM from being calculated in the low latency range

• A lot of data would have to be accessed at short notice, which would increase the access load on our database by around 1000x

• Data volume and data size (e.g. features) would grow massively

• Data Science teams would be severely limited whenever changes needed to be made.

TensorFlow to the rescue

How do you calculate the optimal bid level and campaign in real time? A range of factors such as budget, relevant campaigns and highly diverse user features need to be available within milliseconds and then related to each other using a formula provided by the Data Scientists, all with very low latency!
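The actual formula is provided by the Data Scientists and is not given in this post. Purely as a stand-in, the following Python sketch uses a generic expected-value bid: predicted click probability times a value per click, scaled to a CPM and throttled by a simple budget-pacing factor. `Candidate`, its fields, the pacing rule and the bid cap are all hypothetical; the point is only that budget, campaign and user-level inputs end up in one function that has to run within the latency budget.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    campaign: str
    p_click: float           # hypothetical click probability from user features
    value_per_click: float   # hypothetical campaign value per click (EUR)
    remaining_budget: float  # EUR left for today
    daily_budget: float      # EUR per day
    max_cpm: float           # hypothetical bid cap (EUR per 1000 impressions)


def bid_cpm(c: Candidate) -> float:
    """Expected-value CPM bid, throttled by the share of budget left."""
    if c.daily_budget <= 0 or c.remaining_budget <= 0:
        return 0.0
    expected_cpm = c.p_click * c.value_per_click * 1000  # value of 1000 impressions
    pacing = min(1.0, c.remaining_budget / c.daily_budget)
    return min(expected_cpm * pacing, c.max_cpm)


def choose_bid(candidates: list[Candidate]) -> Optional[tuple[str, float]]:
    """Return (campaign, bid) for the highest positive bid, or None."""
    bids = [(c.campaign, bid_cpm(c)) for c in candidates]
    positive = [b for b in bids if b[1] > 0]
    return max(positive, key=lambda b: b[1]) if positive else None
```

With these illustrative rules, a campaign that has used half of its daily budget bids only half its expected value, so a less clickable campaign with its full budget left can win the placement.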

Since one of our goals was also to safeguard the Data Scientists' independence, we wanted to avoid hard-coding a complex static function directly in a service. Reading and writing high data volumes from and to a database failed the speed test, and pre-calculating individual steps was not flexible enough to deal with these problems.

Figure 5: TensorFlow logo

After extensive consideration and discussion, the best option turned out to be a Machine Learning framework that allows a complete trained model, including the associated data, to be exported. This exported model would then need to be integrated into a service and called up via an interface.

This led to our approach of using TensorFlow and TensorFlow Serving, developed by Google to simplify precisely this type of use case. TensorFlow is a framework for implementing Machine Learning and Artificial Intelligence tasks. With TensorFlow, Data Scientists can encapsulate models and data in an exportable format, keeping control over the algorithms and modifying them independently without requiring further development effort in the SSP or DSP. TensorFlow Serving offers a simple, powerful way to run trained models in production. Essentially, the enriched bid requests are sent to TensorFlow Serving via its API, and the response, consisting of the bid and the campaign, is read back.

Request / response

For the calculation, a large portion of the data and features was already embedded in the TensorFlow models, which were then exported as a 'SavedModel', making them a chunky >15 GB. The TensorFlow Serving model server in Orbidder loads the models in full, which allows very fast model execution and response times in the 10 to 20 millisecond range.

To calculate the bids and campaigns, all that is still missing are the enriched bid requests. In addition to the user ID, these include information on campaigns, context and budget. The TensorFlow Serving model server offers out-of-the-box gRPC and REST interfaces through which the model can be queried. The response is then processed further in the SSP and a bid is placed for a placement, which may lead to an impression on the webpage and ideally to a click.
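A minimal sketch of such a call over the REST interface, using only the Python standard library. The endpoint shape `/v1/models/<name>:predict`, the `instances` (row format) request body, the `predictions` response key and the default REST port 8501 are TensorFlow Serving's documented REST conventions. The host, the model name `bidder`, the feature fields in `EnrichedBidRequest` and the `campaign`/`bid` output names are assumptions, since the real signature of the exported model is not described here.

```python
import json
import urllib.request
from dataclasses import asdict, dataclass

# Assumption: host and model name are placeholders; 8501 is TensorFlow
# Serving's default REST port and ":predict" its predict endpoint.
TF_SERVING_URL = "http://localhost:8501/v1/models/bidder:predict"


@dataclass(frozen=True)
class EnrichedBidRequest:
    # Hypothetical input features; they must match the model's serving signature.
    user_id: str
    placement_id: str
    environment: str      # "onsite", "offsite" or "app"
    url: str
    budget_factor: float  # hypothetical share of campaign budget still available


def build_predict_request(bid_requests: list[EnrichedBidRequest]) -> urllib.request.Request:
    """Build a TensorFlow Serving REST predict call in the row ("instances") format."""
    body = {"instances": [asdict(r) for r in bid_requests]}
    return urllib.request.Request(
        TF_SERVING_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(raw: bytes) -> list[tuple[str, float]]:
    """Extract (campaign, bid) pairs.

    With several named outputs, TensorFlow Serving returns one JSON object per
    instance under "predictions"; the output names used here are assumptions.
    """
    predictions = json.loads(raw)["predictions"]
    return [(p["campaign"], float(p["bid"])) for p in predictions]
```

The request can be sent with `urllib.request.urlopen(req, timeout=...)` and the response body passed to `parse_predictions`. Since the SSP/DSP services are written in Go, the real client looks different; the sketch only shows the shape of the exchange.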

For more detailed content regarding bid calculation please have a look at the blogpost by our Data Science colleagues.

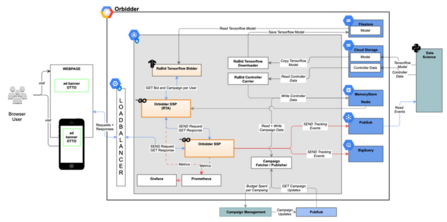

Architecture flow map

Figure 6: Architecture flow map

Kubernetes

The Kubernetes cluster consists of individual node pools in different zones to meet the different services' requirements (CPU vs. RAM).

Redis

Our application cache holds data for between 1 and 60 seconds, after which it refreshes itself with data from Redis.
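This refresh behaviour can be sketched as a small time-to-live cache in front of Redis. It is an illustration, not Orbidder's code: the `loader` callable stands in for a Redis read (with redis-py this could be something like `client.get`), and the TTL is a constructor parameter so it can be set anywhere in the 1 to 60 second range.

```python
import time
from typing import Callable, Dict, Generic, Hashable, Tuple, TypeVar

V = TypeVar("V")


class TTLCache(Generic[V]):
    """In-process cache that reloads an entry once it is ttl_seconds old.

    `loader` stands in for the Redis read; it is injected so the sketch runs
    without a Redis server.
    """

    def __init__(self, loader: Callable[[Hashable], V], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, V]] = {}

    def get(self, key: Hashable) -> V:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is None or now - entry[0] >= self._ttl:
            value = self._loader(key)  # miss or stale: fetch fresh data
            self._entries[key] = (now, value)
            return value
        return entry[1]  # still fresh: serve from memory
```

A service handling tens of thousands of requests per second would also need to make this safe for concurrent access, which the sketch ignores.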

Cloud Storage

Cloud storage is used for easy file exchange as well as for storing and archiving data.

PubSub

This is a message broker for transferring bids, impressions and click events, as well as campaign modifications.

BigQuery

A data warehouse for permanent data storage to improve models and algorithms, as well as to reconcile revenues and expenditures.

Filestore

Given that in GKE we can't mount storage that several pods read from and write to simultaneously, we use Filestore (NFS) to enable this; it's necessary for exchanging TensorFlow models without downtime.
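One way to exchange a model without downtime on such a shared volume is sketched below, assuming the Filestore share is mounted as TensorFlow Serving's model base path. TensorFlow Serving treats numeric subdirectories of the base path as model versions and, with its default settings, serves the latest one. The hypothetical function `publish_model_version` (the paths are hypothetical too) copies a new export next to the running version into a hidden, non-numeric staging directory and then moves it into place under the next version number in a single rename, so the server never picks up a half-copied directory. It assumes only one publisher writes to the base path at a time.

```python
import os
import shutil
import tempfile


def publish_model_version(exported_model_dir: str, base_path: str) -> str:
    """Copy a SavedModel export into base_path as the next numeric version."""
    os.makedirs(base_path, exist_ok=True)
    # Only numeric directory names count as versions
    versions = [int(d) for d in os.listdir(base_path) if d.isdigit()]
    next_version = max(versions, default=0) + 1
    # Stage the copy in a hidden, non-numeric directory on the same file system
    tmp_dir = tempfile.mkdtemp(prefix=".incoming-", dir=base_path)
    staged = os.path.join(tmp_dir, "model")
    shutil.copytree(exported_model_dir, staged)
    # Move the complete copy into place in a single rename
    target = os.path.join(base_path, str(next_version))
    os.rename(staged, target)
    os.rmdir(tmp_dir)  # staging directory is empty now
    return target
```

TensorFlow Serving's default version transition is availability-preserving (the new version is loaded before the old one is unloaded), so with models of more than 15 GB the pods need memory headroom for two versions during the switch.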

Prometheus / Grafana

Our services generate metrics in real time; these are stored in Prometheus and visualised via Grafana.

TensorFlow Serving

To run the Data Scientists' models in production, they are exported with all required data, loaded into TensorFlow Serving and queried via REST with the bid requests.

Orbidder – key facts and figures

• 71 billion requests were handled by the Orbidder SSP in October 2022

• On average, Orbidder processes between 25,000 and 30,000 requests a second

• 100,000 requests per second is the maximum logged throughput of requests to the Orbidder SSP

• The average request processing latency is 15 milliseconds

• The Orbidder GCP Kubernetes cluster logs around 1,000 service instances (pods) in a given day.
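The throughput figures are consistent with each other, as a quick back-of-the-envelope calculation shows: spreading the 71 billion October requests evenly over the month's 31 days gives the average rate, which falls inside the stated 25,000 to 30,000 requests per second, and the logged peak of 100,000 is roughly 3.8 times that average.

```python
from datetime import date

OCTOBER_2022_REQUESTS = 71_000_000_000  # figure from the list above
PEAK_RPS = 100_000                      # maximum logged throughput
SECONDS_PER_DAY = 24 * 60 * 60


def average_rps(total_requests: int, days: int) -> float:
    """Average requests per second if the load were spread evenly."""
    return total_requests / (days * SECONDS_PER_DAY)


days_in_october = (date(2022, 11, 1) - date(2022, 10, 1)).days  # 31
avg = average_rps(OCTOBER_2022_REQUESTS, days_in_october)
peak_ratio = PEAK_RPS / avg

print(f"{avg:,.0f} requests/s on average")  # prints: 26,508 requests/s on average
print(f"peak is {peak_ratio:.1f}x the average")  # prints: peak is 3.8x the average
```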

Orbidder & sustainability

Otto aims to operate climate-neutrally by 2030. Here's a great explanation of the Otto Group's climate goals.

We always try to keep these goals in mind at Otto BI, and therefore with Orbidder too. While it's difficult to influence CO2 emissions on the frontend side, e.g. in a web browser displaying online advertising (or even to measure them at all), on the SSP/DSP backend we can consciously choose sustainable technologies.

The Google Cloud Platform offers a broad spectrum of options for making services sustainable; for example, when selecting services you can always see how much CO2 a specific technology or service causes. On average, the Orbidder components cause around 1.6 t of CO2 per month. Is this a lot or just a little? According to some studies, this corresponds to the CO2 emissions of just 6 people every single day.

If we set aside the fundamental debate about the necessity of Internet advertising for a moment, these are already comparatively good values. Our services run in data centres in Belgium, which record some of the lowest emission values in Europe. Nevertheless, we are constantly streamlining Orbidder's computations and thus steadily reducing its resource consumption.
