What is the article about?

In the previous articles we talked about what we want to achieve with Learning To Rank (LTR). In this article we provide some insights into how we built the judgement pipeline, which will serve as the basis for future LTR pipelines. What does a judgement pipeline do in the LTR context? It computes judgements from user data for a certain timeframe. These judgements will serve as the labels a ranking model is trained on. Right now, we also export these judgements for live validation on selected queries.

Introduction

The goal of this blog post is to answer the following questions:

- What should this pipeline do?

- Where did we start?

- Where are we now?

Let’s get the first question out of the way and think about what our final pipeline should look like. The complete pipeline would consist of the following steps:

- Gather user data from otto.de

- Bring it into a format that contains only the necessary data (this step also makes our data GDPR-compliant)

- Use the data to compute judgement scores

- Provide our judgement-based ranking in a format that the search engine understands

- Push it into our search engine (Solr) to test it in the live otto.de search

When we go live with LTR, the output of step 3 would be fed into our ranking model for daily retraining.
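Step 2 is essentially data minimization: keep an explicit allow-list of fields and drop everything else. The following is a minimal Python sketch of that idea only. The field names (`query`, `product_id`, `position`, `event_type`, `timestamp`) and the helper names are hypothetical; the fields our pipeline actually keeps are not listed in this article.

```python
from typing import Any

# Hypothetical allow-list of the fields kept per clickstream event; every other
# field (for example user or session identifiers) is dropped.
ALLOWED_FIELDS = ("query", "product_id", "position", "event_type", "timestamp")


def minimize_event(raw_event: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the event that contains only allow-listed fields."""
    return {field: raw_event[field] for field in ALLOWED_FIELDS if field in raw_event}


def minimize_events(raw_events: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Apply minimize_event to a batch of events."""
    return [minimize_event(event) for event in raw_events]


raw = {"query": "sofa", "product_id": "p1", "position": 3, "event_type": "click",
       "timestamp": "2021-06-01T10:00:00Z", "user_id": "u-123", "session_id": "s-9"}
print(minimize_event(raw))
```

Using an allow-list rather than a list of fields to remove means that a new field showing up in the clickstream is dropped by default instead of leaking into later steps.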

How did we start?

We started with a proof-of-concept (POC) phase for LTR on a very large EC2 instance that could handle all these requirements. We built scripts for every single step and executed them via a simple orchestration script. This was somewhat flexible, but reliability and scalability were nowhere near where we wanted them.

Since the results of our POC were promising, our next step was to build a flexible and scalable pipeline in AWS.

We wanted to move from the POC straight to a production-ready pipeline. Therefore, we took part in an AWS Prototyping engagement, during which experienced consultants supported us.

The result is a flexible and scalable pipeline, enabling fast iterations in a straightforward way. In the next paragraphs, we will describe what this pipeline looks like.

Judgement generation pipeline

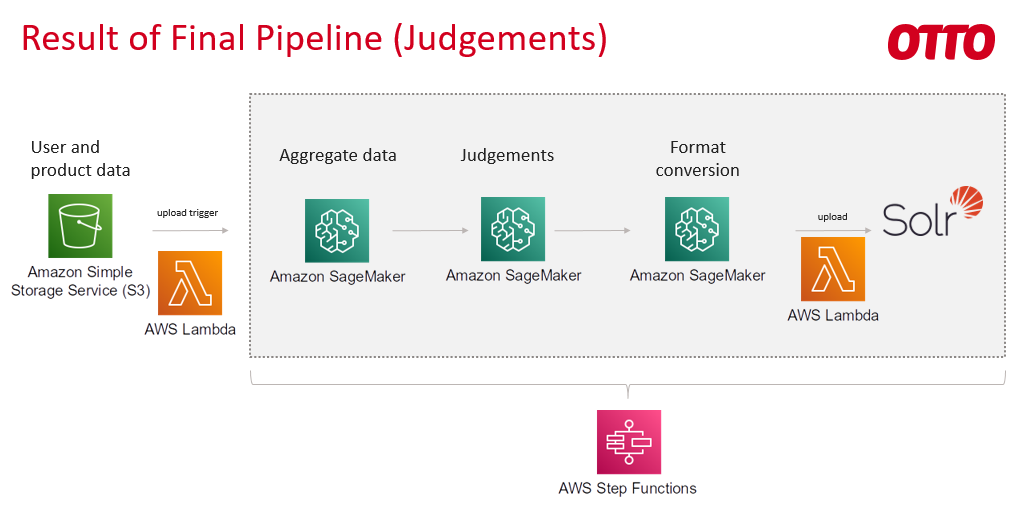

Let's take a look at how our services handle the necessary steps for our judgement pipeline. We orchestrate the steps via dark knight, an AWS Step Functions workflow:

- The first step is to extract the relevant information from the clickstream that enters this pipeline. Given a timeframe, emo outputs all query/product/position tuples with the corresponding view, click and A4L (add to basket or add to wishlist) information.

- Based on this information and our current judgement formula, judge-dredd calculates a judgement score per query/product/position tuple.

- Then solrizer creates an XML file containing a product ranking per query, based on our judgement scores. We can select the queries via sampling to control how much traffic the judgements receive during an experiment.

- Finally, solrizer-upload publishes these rankings to Solr, so we can validate them with real customer feedback.
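To make the data flow through emo, judge-dredd and solrizer more concrete, here is a small in-memory Python sketch of these three steps. It is not our implementation. The event format, the placeholder judgement formula (clicks plus twice the A4Ls, divided by views) and the rule that a product's score for a query is its best score over all positions are assumptions made for illustration, since our actual judgement formula is not described here. The XML output assumes Solr's Query Elevation Component format (`elevate.xml`), which the file name `elevate-judgements.xml` in the solrizer config later in this article suggests. `sample_percent` and `top_n` are hypothetical parameters.

```python
import hashlib
import xml.etree.ElementTree as ET
from collections import defaultdict

# Placeholder weight (assumption): one A4L counts as much as two clicks.
A4L_WEIGHT = 2.0


def aggregate(events):
    """emo-like step: count views, clicks and A4Ls per (query, product, position) tuple.

    Each event is assumed to be a dict with "query", "product_id", "position" and an
    "event_type" of "view", "click" or "a4l".
    """
    counts = defaultdict(lambda: {"view": 0, "click": 0, "a4l": 0})
    for event in events:
        key = (event["query"], event["product_id"], event["position"])
        counts[key][event["event_type"]] += 1
    return dict(counts)


def judgement_score(c):
    """judge-dredd-like step with a placeholder formula: weighted engagement per view."""
    if c["view"] == 0:
        return 0.0
    return (c["click"] + A4L_WEIGHT * c["a4l"]) / c["view"]


def in_sample(query, sample_percent):
    """Hypothetical query sampling: hash each query into one of 100 stable buckets."""
    bucket = int(hashlib.sha256(query.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < sample_percent


def to_elevate_xml(counts, sample_percent=100, top_n=100):
    """solrizer-like step: rank products per query and emit elevate.xml-style markup."""
    scores = defaultdict(dict)  # query -> {product_id: score}
    for (query, product, _position), c in counts.items():
        # Assumption: a product's score for a query is its best score over all positions.
        scores[query][product] = max(judgement_score(c), scores[query].get(product, 0.0))
    root = ET.Element("elevate")
    for query in sorted(scores):
        if not in_sample(query, sample_percent):
            continue
        query_el = ET.SubElement(root, "query", {"text": query})
        # Highest score first; ties broken by product id to keep the output deterministic.
        ranked = sorted(scores[query].items(), key=lambda item: (-item[1], item[0]))
        for product, _score in ranked[:top_n]:
            ET.SubElement(query_el, "doc", {"id": product})
    return ET.tostring(root, encoding="unicode")


events = [
    {"query": "sofa", "product_id": "p1", "position": 1, "event_type": "view"},
    {"query": "sofa", "product_id": "p1", "position": 1, "event_type": "click"},
    {"query": "sofa", "product_id": "p2", "position": 2, "event_type": "view"},
]
print(to_elevate_xml(aggregate(events)))
```

Hashing the query instead of drawing random numbers keeps the sample stable across runs: the same query always lands in the same bucket, so raising `sample_percent` only ever adds queries to an experiment.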

What follows is a more in-depth look at the technology our services and the pipeline use.

How did we build it?

Most of the developers on our team are comfortable with Python, so we chose it as our main language. To transform large amounts of data, we use PySpark. We firmly believe in the infrastructure-as-code approach to building a sustainable and maintainable cloud infrastructure. Most of our infrastructure is deployed via Terraform; for the more intricate orchestrations we use AWS Step Functions.

In general, our services are Amazon SageMaker Processing jobs that run on an Elastic MapReduce (EMR) cluster. This allows us to control processing times and costs by simply scaling the cluster up or down, depending on our needs.

To orchestrate our services, we built a workflow graph in Python via the AWS Step Functions Data Science SDK. This enables us to change any parameter of any service by editing a JSON file and uploading it, without having to redeploy the entire pipeline.

Let’s take a look at a JSON snippet which configures the solrizer step in our pipeline:

```json
{
  "solrizer": {
    "job_name": "solrizer-{job_id}",
    "input": {
      "relevant_queries_file": "s3://path",
      "tesla_bucket": "bucket-path",
      "judgements_path": "s3://path/{context}/{version}/{job_id}/output/judgement_scores.parquet"
    },
    "runtime": {
      "execute": true,
      "top_offers_per_query_limit": 100,
      "min_variations_per_offer_warning_threshold": 1000,
      "instance_count": 1,
      "instance_type": "ml.m5.4xlarge",
      "filter_by_availability": false,
      "judgement_col_name": "weighted_click_judgement_score",
      "image_uri": "ecr:latest"
    },
    "output": {
      "config": "s3://bucket/temp/{context}/{version}/{job_id}/config.json",
      "path": "s3://bucket/temp/{context}/{version}/{job_id}/elevate-judgements.xml",
      "logs": "s3://bucket/temp/{context}/{version}/{job_id}/logs"
    }
  }
}
```

The code snippet describes all necessary parameters for the solrizer step in our workflow. The JSON consists of four fields: job_name, input, runtime and output. The job_name is set programmatically from the id of the entire workflow, which makes it easier for us to find individual steps in the AWS Console.

Within the input field, we define all the data sources this step requires. Note that the judgements path is context-sensitive: through the {context}, {version} and {job_id} placeholders, this step automatically pulls the correct data for this workflow id and version.
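Filling in these placeholders is plain string templating. A minimal sketch, assuming the placeholders are standard Python `str.format` fields and that their values come from the running workflow; the function name `resolve` and the placeholder values below are made up for illustration:

```python
import json
from typing import Any


def resolve(value: Any, **placeholders: str) -> Any:
    """Recursively fill {context}/{version}/{job_id}-style placeholders in a parsed config."""
    if isinstance(value, str):
        # str.format raises KeyError for an unknown placeholder, so typos fail loudly.
        return value.format(**placeholders)
    if isinstance(value, dict):
        return {key: resolve(item, **placeholders) for key, item in value.items()}
    if isinstance(value, list):
        return [resolve(item, **placeholders) for item in value]
    return value  # numbers, booleans and null pass through unchanged


# Excerpt of the solrizer config; the placeholder values are hypothetical.
raw_config = json.loads("""
{
  "solrizer": {
    "job_name": "solrizer-{job_id}",
    "input": {"judgements_path": "s3://path/{context}/{version}/{job_id}/output/judgement_scores.parquet"},
    "runtime": {"execute": true, "instance_count": 1}
  }
}
""")
config = resolve(raw_config, context="search", version="v1", job_id="run-42")
print(config["solrizer"]["job_name"])  # prints solrizer-run-42
```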

Through the parameters in runtime we can easily toggle single steps on or off, set feature toggles or change the instance size for the corresponding step. The image_uri value determines which version of the Docker image is used, so we can quickly switch between different versions of our code for this step.

The output field tells the step where to write its logs and where to save the resulting data. We regularly use this for ad-hoc analyses of the outputs of individual steps in our pipelines. It also allows us to re-run only parts of the pipeline, using data that other runs provided.

Usually, this output path is then the input path for the next step in the workflow.

The complete config for a dark knight run then consists of a chain of configs just like the one above. As soon as one step finishes, the next one is triggered, and so on.
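In our setup, AWS Step Functions takes care of this sequencing. The plain-Python sketch below only illustrates the semantics described above and does not use the Step Functions Data Science SDK: steps run in order, a step whose runtime has `"execute": false` is skipped, and an exception stops the chain. The step key `judge_dredd`, the function `run_chain` and the step callables are hypothetical stand-ins for the real processing jobs.

```python
from typing import Any, Callable

# Hypothetical stand-in for launching a processing job with a resolved step config.
Step = Callable[[dict[str, Any]], None]


def run_chain(chain: list[dict[str, Any]], steps: dict[str, Step]) -> list[str]:
    """Run one-key step configs such as {"solrizer": {...}} in order.

    Steps with "execute": false are skipped. Because every step reads from the
    paths in its own "input" block, a skipped step means the next step simply
    reads data that an earlier run already wrote there.
    """
    executed = []
    for entry in chain:
        name, config = next(iter(entry.items()))
        if not config["runtime"].get("execute", True):
            continue
        steps[name](config)  # an exception here stops the chain, like a failed state
        executed.append(name)
    return executed


# The judge-dredd output path is the solrizer input path, as in a typical run.
chain = [
    {"judge_dredd": {"runtime": {"execute": False},
                     "output": {"path": "s3://bucket/run-41/judgement_scores.parquet"}}},
    {"solrizer": {"runtime": {"execute": True},
                  "input": {"judgements_path": "s3://bucket/run-41/judgement_scores.parquet"}}},
]
steps = {
    "judge_dredd": lambda cfg: print("writing", cfg["output"]["path"]),
    "solrizer": lambda cfg: print("reading", cfg["input"]["judgements_path"]),
}
# Only solrizer runs here; it re-uses judgements written by an earlier run.
print(run_chain(chain, steps))
```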

That’s basically it 😉

Conclusion and Next Steps

With these concepts we built a data pipeline that is scalable, flexible and robust. We are quite happy with the result 😊

We learned a lot about the functionality certain AWS services offer and about which other services exist. Since building this pipeline, we have gathered considerable experience with this kind of infrastructure and, so far, have not had any major issues.

Still, it was not always as straightforward as it could have been. Even AWS services have limitations, and some of them are relatively new or still in active development. All the problems we faced were solved quite quickly, though, thanks to the very good support we received, thorough documentation, or reading the source code itself.

What’s next?

A big next step will be to bring our entire LTR pipeline live. We're eager to apply everything we learned while building the judgement pipeline to our first machine learning pipeline. It should be as scalable and flexible as our data processing pipeline is right now.

If these topics sound interesting to you and/or you have experience in building ML pipelines, we would love to exchange ideas with you or welcome you to our team. Simply contact us at JARVISttd.


Similar Articles

- Team PIT, August 13, 2024: About the development of genAI assistants AskARev and Searchbuddy. Find out (in a very special way) how the PIT team at Otto Group data.works developed the generative AI-based products AskARev and Searchbuddy. (Architecture, Development, Operations)

- Katharina Maria, July 24, 2024: Why technical writers can rescue us. Find out how technical writers make complex software understandable and accessible and create standards and centralized knowledge! (Development, Operations, Working methods)