What is the article about?

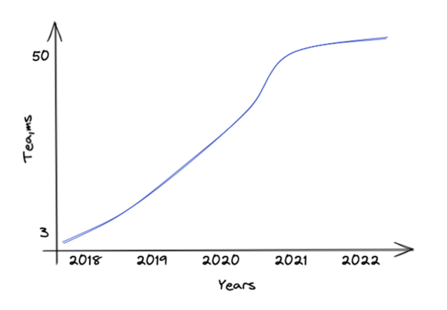

When we launched our platform implementation back in 2018, we jointly committed to creating state-of-the-art software aligned with thoroughly modern and proven practices. First off, we deep-dived on modern software architectures including Event Driven, Microservices, Distributed Systems, Cloud Native and DevOps – which resulted in a couple of positive architecture decisions.

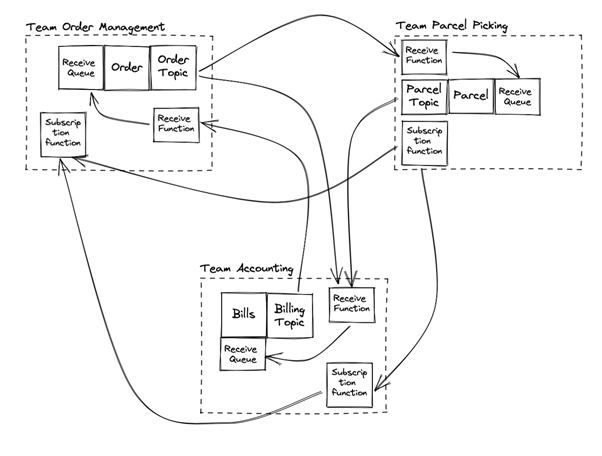

We often describe our own architecture as “complex, but manageable”. As a fundamental design principle we follow consumer-initiated subscriptions, with responsibility for subscriptions resting with consumers. Producers offer interfaces to consumers; the consumers decide on their own what to consume and which data to filter.
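The principle can be illustrated with a small in-memory model. This is only a sketch: the `Topic` class, the `orders` topic and the `status` field are hypothetical names chosen for illustration, not part of the platform described here. The point is that the producer publishes everything, while each consumer registers itself and brings its own filter.

```python
from dataclasses import dataclass, field
from typing import Callable

Event = dict


@dataclass
class Topic:
    """Producer-owned interface: publishes every event, knows no consumer logic."""
    name: str
    _subscriptions: list = field(default_factory=list)

    def subscribe(self, deliver: Callable[[Event], None],
                  wants: Callable[[Event], bool] = lambda event: True) -> None:
        # The consumer initiates the subscription and supplies its own filter.
        self._subscriptions.append((deliver, wants))

    def publish(self, event: Event) -> None:
        # The producer simply offers the event; each consumer's filter decides.
        for deliver, wants in self._subscriptions:
            if wants(event):
                deliver(event)


orders = Topic("orders")  # hypothetical topic

shipping_inbox: list = []
# Hypothetical consumer: the shipping team only wants paid orders.
orders.subscribe(shipping_inbox.append, wants=lambda e: e["status"] == "PAID")

orders.publish({"order_id": 1, "status": "CREATED"})
orders.publish({"order_id": 2, "status": "PAID"})
print(shipping_inbox)
```

Only the second event reaches the shipping consumer; the producer never had to know which events that team cares about.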

AWS Event Driven architecture

When we started implementing the above-mentioned architecture in our system, we were fully committed to a single cloud provider – AWS. Using AWS SNS for distributing events and AWS SQS for consuming them was the best fit for supporting this architectural approach.

As a side note, we strongly believe in the core AWS credo of “You build it, you run it!” This means each team manages its own set of AWS accounts without central operations or SRE teams. Therefore, producers have to allowlist their consumers’ AWS account IDs. This allowlisting approach never felt quite right because it required extensive manual interaction. It was acceptable, though, as all our internal code is under version control in GitHub and consumers can either create PRs (Pull Requests) or get in touch with producers directly via chat in order to get allowlisted. Once the consumer has subscribed, Terraform re-applies the subscription on each run of the CI/CD pipeline to keep the connection up and running.
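To show what such an allowlist amounts to, here is a minimal sketch that builds an SNS topic access policy granting `sns:Subscribe` to a list of consumer accounts. The function name, topic ARN and account IDs are made up for illustration. In the setup described above the policy would be managed through Terraform rather than generated in Python.

```python
import json


def build_topic_policy(topic_arn: str, consumer_account_ids: list[str]) -> dict:
    """Return an SNS access policy that lets the listed accounts subscribe."""
    principals = [
        f"arn:aws:iam::{account_id}:root"
        for account_id in sorted(set(consumer_account_ids))
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowlistedConsumers",
                "Effect": "Allow",
                "Principal": {"AWS": principals},
                "Action": "sns:Subscribe",
                "Resource": topic_arn,
            }
        ],
    }


policy = build_topic_policy(
    "arn:aws:sns:eu-central-1:111111111111:orders",  # hypothetical producer topic
    ["333333333333", "222222222222"],                # hypothetical consumer accounts
)
print(json.dumps(policy, indent=2))
```

Every new consumer means one more entry in that list, which is exactly the manual step (a PR or a chat message) that made the approach feel cumbersome.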

This solution has some other complications besides subscription management, however. If data loss occurs (which is pretty rare), a consumer needs to interact with the producer in order to replay events. In our case, teams often chatted via our collaboration tool to recover missing events from a specific point in time. This naturally leads to major effort by both the producer and consumer.

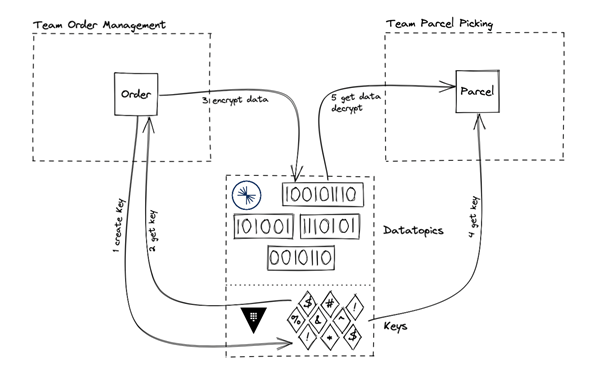

The payload itself is transparently encrypted using AWS KMS, which satisfies the main data protection rules that ensure GDPR compliance. Whenever we store data for longer periods, we also implement field-level encryption of all PII (personal data such as names, birthdays and addresses). Because we share access to queues and topics within our exchange system without central key management in place, we would need to share encryption keys between teams on the fly. We considered different ways of exchanging keys or access keys between teams, but didn’t find a really satisfactory solution. As a result, we agreed not to keep PII data on queues and topics for longer periods, so that they never become long-term data storage. A downside is that this makes the usage of dead-letter queues nearly impossible.
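Field-level encryption means that only the PII fields of an event are encrypted, while the rest stays readable for routing and filtering. The sketch below shows the shape of that idea under loud assumptions: the field names, the helper names `protect` and `reveal`, and above all `toy_cipher` are hypothetical. `toy_cipher` is an insecure XOR stand-in so the example runs with the standard library only. A real system would use an authenticated cipher from a vetted library, with keys held in a KMS.

```python
import base64
import json
from typing import Callable

PII_FIELDS = {"name", "birthday", "address"}  # assumption: fields treated as PII


def protect(event: dict, encrypt: Callable[[bytes], bytes],
            pii_fields: set = PII_FIELDS) -> dict:
    """Encrypt only the PII fields; all other fields are passed through unchanged."""
    out = {}
    for key, value in event.items():
        if key in pii_fields:
            ciphertext = encrypt(json.dumps(value).encode("utf-8"))
            out[key] = base64.b64encode(ciphertext).decode("ascii")
        else:
            out[key] = value
    return out


def reveal(event: dict, decrypt: Callable[[bytes], bytes],
           pii_fields: set = PII_FIELDS) -> dict:
    """Reverse of protect(): decrypt the PII fields, leave the rest untouched."""
    out = {}
    for key, value in event.items():
        if key in pii_fields:
            plaintext = decrypt(base64.b64decode(value))
            out[key] = json.loads(plaintext.decode("utf-8"))
        else:
            out[key] = value
    return out


def toy_cipher(data: bytes, key: bytes = b"demo-key") -> bytes:
    # INSECURE placeholder (repeating-key XOR), used only to keep the sketch runnable.
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


event = {"order_id": 42, "name": "Jane Doe", "address": "Example Street 1"}
protected = protect(event, toy_cipher)
print(protected)                      # order_id readable, name/address opaque
print(reveal(protected, toy_cipher))  # original event again
```

The sketch also makes the key-sharing problem visible: whoever calls `reveal` needs the same key as the producer, and without central key management there is no clean way to hand it over.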

Multicloud challenges

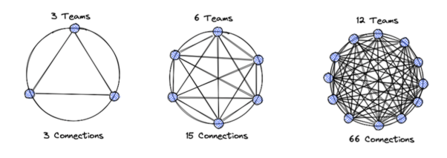

When we needed to tackle Multicloud in 2020, the complexity of message exchange rocketed. AWS SQS can’t consume directly from Google Cloud Pub/Sub topics – and vice versa. To work around this we implemented serverless functions for both flow directions. These functions are HTTP-based and make event delivery possible between both cloud providers.

When it came to subscription management we once again identified a lot of manual work: consumers had to hand over their HTTP endpoint to producers – and the producers had to name the identities of outgoing topics (such as AWS ARNs for SNS) so that consumers could validate the event source. Again, very dissatisfying… but instead of reducing complexity, we added a further layer of logic for subscription management: each team had to create an endpoint where consumers could initiate their subscription and check whether the subscription exists. Well, this worked – but as this code was rarely used, and with authentication and authorization further clouding the big picture, it was getting hard to understand what the code was really doing. We felt like we needed a simpler solution!
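The source validation mentioned above can be sketched as a small handler for an SNS-style HTTP delivery. This is an illustration, not the original functions: `handle_delivery`, `TRUSTED_TOPIC_ARNS`, `forwarded` and the ARN are hypothetical. The sketch relies on the `Type`, `TopicArn` and `Message` fields of the SNS HTTP notification body. A production handler would also have to verify the message signature, which is omitted here.

```python
import json

# Assumption: topic ARNs that producers handed over to this consumer.
TRUSTED_TOPIC_ARNS = {"arn:aws:sns:eu-central-1:111111111111:orders"}

forwarded: list = []  # stand-in for the consumer's own queue or topic


def handle_delivery(body: str) -> tuple[int, str]:
    """Return an (HTTP status, reason) pair for one incoming HTTP delivery."""
    try:
        envelope = json.loads(body)
    except json.JSONDecodeError:
        return 400, "body is not JSON"
    if not isinstance(envelope, dict):
        return 400, "unexpected body"
    # Reject anything that does not come from a topic we were told about.
    if envelope.get("TopicArn") not in TRUSTED_TOPIC_ARNS:
        return 403, "unknown event source"
    # Only notifications carry events; confirmations are handled elsewhere.
    if envelope.get("Type") != "Notification":
        return 202, "ignored"
    forwarded.append(envelope.get("Message", ""))
    return 200, "accepted"


ok = handle_delivery(json.dumps({
    "Type": "Notification",
    "TopicArn": "arn:aws:sns:eu-central-1:111111111111:orders",
    "Message": "{\"order_id\": 2}",
}))
rejected = handle_delivery(json.dumps({
    "Type": "Notification",
    "TopicArn": "arn:aws:sns:eu-central-1:999999999999:other",
    "Message": "{}",
}))
print(ok, rejected, forwarded)
```

Even in this reduced form, the allowlist of ARNs has to be agreed between two teams by hand, which is the manual work the paragraph describes.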

In mid-2021 we began to think about how to resolve the main pain-points on the existing event-exchange data platform. Our goals were to achieve:

- event distribution directly from Spring Boot Containers to topics

- consumption based on technologies such as Spring Boot, or with serverless functions (AWS Lambda or GCP Cloud Functions)

- discovery for subscribers on which topics and events are available

- automated (i.e. non human-interactive) subscription management

- encryption for the event payload without exchanging IAM entities.

As a highly diverse working group of developers, tech leads and architects we began to investigate different cloud-agnostic event-exchange platforms. There’s no space here for me to go into which different possibilities we evaluated (feel free to reach out ;>) and naturally I’m aware that all solutions have their pros and cons. That said, Apache Kafka looked most promising for us as the industry’s leading event-stream processing platform over recent years. Apache Kafka has great built-in features such as replay (by resetting the offset of a specific consumer group), it has great throughput – much more than we ever expected – and offers client libraries for the most-used languages and frameworks. To stay aligned with our tech manifest, we were more than happy to find a company that offers a managed hosting service for Apache Kafka: Confluent.
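Replay by offset reset is easiest to see in a toy model. The `PartitionLog` class below is a hypothetical in-memory stand-in for a single partition, not Kafka's client API. It assumes record timestamps are ascending. In real Kafka the same effect is achieved with tooling such as `kafka-consumer-groups --reset-offsets --to-datetime` or with the consumer's offsets-for-times lookup followed by a seek.

```python
from bisect import bisect_left
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Toy single partition: an append-only list of (timestamp_ms, value) records."""
    records: list = field(default_factory=list)
    committed: dict = field(default_factory=dict)  # consumer group -> next offset

    def append(self, timestamp_ms: int, value: str) -> None:
        self.records.append((timestamp_ms, value))

    def poll(self, group: str) -> list:
        # Read everything after the group's committed offset, then commit.
        start = self.committed.get(group, 0)
        self.committed[group] = len(self.records)
        return [value for _, value in self.records[start:]]

    def reset_to_timestamp(self, group: str, timestamp_ms: int) -> None:
        # Move the group to the first record whose timestamp is >= timestamp_ms.
        timestamps = [ts for ts, _ in self.records]
        self.committed[group] = bisect_left(timestamps, timestamp_ms)


log = PartitionLog()
for ts, value in [(1000, "a"), (2000, "b"), (3000, "c")]:
    log.append(ts, value)

first_read = log.poll("shipping")         # the group reads all three records
nothing_new = log.poll("shipping")        # already committed, nothing to read
log.reset_to_timestamp("shipping", 2000)  # the consumer decides to replay
replayed = log.poll("shipping")
print(first_read, nothing_new, replayed)
```

The records stay in the log and only the consumer group's position moves, so a replay needs no help from the producer. That is the contrast with the SNS/SQS setup, where recovering events meant asking the producing team.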

Apache Kafka – our future data-exchange platform

With all these benefits in place, we began to experiment with Apache Kafka hosted on the Confluent cloud to validate our expectations. And this experience was a blast! Kafka is so simple to use that our developers fell in love with this event-stream platform even during the experimentation phase. It integrates seamlessly with the other technologies we use, for instance Spring Boot, and offers most of the missing features that we complained about in our SNS/SQS Pub/Sub environment. We quickly felt like this is our future platform – it’s cloud agnostic and easy to implement. Two aspects were still missing, though. #1: Confluent does not offer any self-service options from the product-team perspective. #2: as we were struggling with topic-related PII data, we wanted an extra layer of security and to be able to encrypt fields in the payload.

Coming back to #1, you are either an organization admin and manage the whole Confluent account, or you have no access rights to the platform. We were more than happy that our friends over at Hermes Germany had already addressed this problem and implemented a DevOps Self-Service Platform for Kafka called Galapagos. Galapagos fills this gap: it lets product teams create topics, see which events other teams provide, and manage subscriptions, and it offers a Schema Registry.

To solve issue #2 (on-the-fly field-level encryption) we had several meetings with the engineers at Confluent. Their Accelerator can handle the encryption/decryption task – but doesn’t offer a platform on which product teams can share access to encryption keys. The Confluent Accelerator offers connections to different KMS (Key Management System) solutions such as AWS KMS or GCP KMS. Here too, we were looking for a cloud-agnostic answer. Happily, HashiCorp Vault (https://www.vaultproject.io) fits this role beautifully: it’s cloud-agnostic, can connect to all cloud providers and is already an implemented option within the Accelerator. As the team which manages the Confluent account and the Galapagos instance had already gathered extensive experience in running HashiCorp Vault, it was a no-brainer for us to choose Vault as our externalized KMS.

In a nutshell, our data exchange now looks like this (simplified and with fewer teams and connections than in real life):

Migration planning and scaling capabilities for sustainable software development

Our overall developer experience with Kafka so far is light-years better than using a self-made serverless data-exchange system. Deciding to migrate more than 50 teams to Kafka was the tough route to go, as business-side management did not understand the benefits of a cloud-agnostic system out-of-the-box. From our side, though, we do understand that technical migrations should always support business goals. Confluent Kafka will speed up development cycles and we are very confident that it is far more robust than a hand-made solution. We strongly believe in low-maintenance software that is easily adoptable and can react quickly to business requirements. Kafka definitely supports us on our journey towards this – and also gives us the confidence to implement new functions quicker, because we can avoid implementing many non-functional requirements (e.g. replay, encryption) ourselves. Kafka scales way better than most other data-exchange platforms, as it does not use HTTP for data exchange and offers blazingly fast throughput out-of-the-box.

We are super-excited to see how we evolve with Kafka in the future!
